Recently, I encountered another slow query issue that caught my attention. After a quick investigation, it turned out to be yet another case of MySQL’s optimizer making inaccurate estimations in its query planning. Here’s the problematic SQL:

select * from t_pay_record

WHERE

((

user_id = 'user_id1'

AND is_del = 0

))

ORDER BY

id DESC

LIMIT 20

This query took a whopping 20 minutes to return results! However, when we switched to a different user_id, the execution was lightning fast. From our production environment observations, most users experienced normal performance. We even tested with users who had similar data distribution patterns, and those queries ran smoothly too.

Let’s start by examining the EXPLAIN output for our original problematic SQL:

+----+-------------+--------------+------------+-------+-----------------------------------------------------------------------------------------+---------+---------+------+-------+----------+-------------+

| id | select_type | table | partitions | type | possible_keys | key | key_len | ref | rows | filtered | Extra |

+----+-------------+--------------+------------+-------+-----------------------------------------------------------------------------------------+---------+---------+------+-------+----------+-------------+

| 1 | SIMPLE | t_pay_record | NULL | index | idx_user_id,idx_user_status_pay,idx_user_id_trade_code_status_amount_create_time_is_del | PRIMARY | 8 | NULL | 22593 | 0.01 | Using where |

+----+-------------+--------------+------------+-------+-----------------------------------------------------------------------------------------+---------+---------+------+-------+----------+-------------+

Now, when we tested with users having similar data distribution but normal response times, we got different EXPLAIN results. Some showed:

+----+-------------+--------------+------------+-------+-----------------------------------------------------------------------------------------+---------------------------------------------------------+---------+------+-------+----------+-------------+

| id | select_type | table | partitions | type | possible_keys | key | key_len | ref | rows | filtered | Extra |

+----+-------------+--------------+------------+-------+-----------------------------------------------------------------------------------------+---------------------------------------------------------+---------+------+-------+----------+-------------+

| 1 | SIMPLE | t_pay_record | NULL | index | idx_user_id,idx_user_status_pay,idx_user_id_trade_code_status_amount_create_time_is_del | idx_user_id_trade_code_status_amount_create_time_is_del | 195 | NULL | 107561| 10.00| Using where |

+----+-------------+--------------+------------+-------+-----------------------------------------------------------------------------------------+---------------------------------------------------------+---------+------+-------+----------+-------------+

While others displayed:

+----+-------------+--------------+------------+-------+-----------------------------------------------------------------------------------------+-------------+---------+------+-------+----------+-------------+

| id | select_type | table | partitions | type | possible_keys | key | key_len | ref | rows | filtered | Extra |

+----+-------------+--------------+------------+-------+-----------------------------------------------------------------------------------------+-------------+---------+------+-------+----------+-------------+

| 1 | SIMPLE | t_pay_record | NULL | index | idx_user_id,idx_user_status_pay,idx_user_id_trade_code_status_amount_create_time_is_del | idx_user_id | 195 | NULL | 87514| 10.00| Using where |

+----+-------------+--------------+------------+-------+-----------------------------------------------------------------------------------------+-------------+---------+------+-------+----------+-------------+

Based on these observations, it’s clear that the query was using the wrong index. But why would MySQL choose an inappropriate index? This happens due to multiple interconnected factors, and this article will dive deep into analyzing these causes while providing practical solutions.

Analyzing MySQL Slow Queries#

In my previous articles, I’ve mentioned that SQL optimization typically relies on three essential tools:

EXPLAIN: This provides a surface-level analysis without actually executing the SQL. While it might not always be completely accurate or detailed, it can reveal critical issues.

PROFILING: Enabled with

set profiling = 1, this tool samples SQL execution and breaks down the query into different phases with their respective timing. It requires actual SQL execution and success, though the phase breakdown isn’t always granular enough. It’s mainly useful for identifying and avoiding certain phases (like preventing in-memory sorting).OPTIMIZER TRACE: This tool provides a detailed view of every step the optimizer takes, requiring actual SQL execution and success. MySQL’s optimizer considers numerous factors through multiple iterations, making its configuration quite complex. While default settings work fine in most scenarios, special cases require manual intervention.

It’s worth noting that across different MySQL versions, EXPLAIN and OPTIMIZER TRACE results may vary due to inherent design limitations in MySQL. EXPLAIN tends to be closer to the actual execution result, while OPTIMIZER TRACE acts like checkpoint sampling throughout the process. During MySQL’s continuous development iterations, some inconsistencies are inevitable.

For our specific SQL case, EXPLAIN already reveals that it’s using the wrong index. However, to understand why this happens, we need to dig deeper with OPTIMIZER TRACE. Before we proceed with that analysis, let me explain MySQL’s InnoDB query optimizer statistics configuration.

MySQL InnoDB Optimizer Statistics Configuration#

Official documentation: https://dev.mysql.com/doc/refman/8.0/en/innodb-persistent-stats.html

To optimize user SQL queries, MySQL performs SQL parsing, rewriting, and query plan optimization. For the InnoDB engine, creating a query plan involves analyzing:

- The cost of a full table scan

- Which indexes are available for the WHERE and ORDER BY conditions

- The query cost for each potential index

- Selecting and executing the plan with the lowest cost

Each index’s query cost is determined through InnoDB optimizer statistics. This data is collected by sampling table and index data—not through complete collection, but through statistical sampling. Several configuration parameters control this process:

innodb_stats_persistent: This global variable controls whether statistics are persisted by default (ON by default). We generally can’t accept in-memory storage since database restarts would require table re-analysis, slowing startup times. Individual table control usesSTATS_PERSISTENT(inCREATE TABLEandALTER TABLEstatements).innodb_stats_auto_recalc: This global variable controls automatic updates by default (ON by default), triggering background asynchronous updates when more than 10% of table rows are modified. Individual table control usesSTATS_AUTO_RECALC(inCREATE TABLEandALTER TABLEstatements).innodb_stats_persistent_sample_pages: This global variable controls the number of pages sampled by default (20 by default). Each update randomly samples 20 pages from the table and each index to estimate query costs for each index and full table scans. Individual table control usesSTATS_SAMPLE_PAGES(inCREATE TABLEandALTER TABLEstatements).

Root Cause Analysis of the Slowest SQL Execution#

From our previous EXPLAIN results, we know the final query used the PRIMARY key index. This means the entire SQL execution process involved: traversing each row in the table in reverse primary key order until finding 20 matching records. Given the execution time, we know this process examined many records before collecting 20 matches, making it extremely inefficient. But why did this happen?

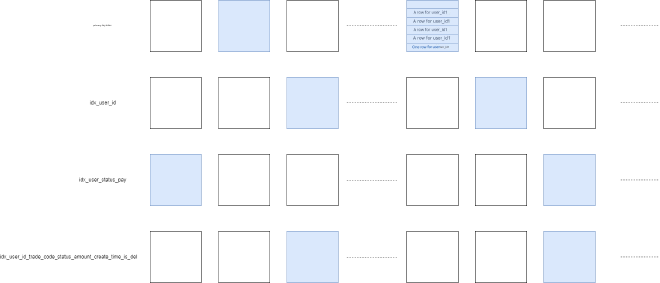

Looking at our SQL statement, in the second step mentioned earlier, the considered indexes include those related to user_id and is_del from the WHERE conditions (as shown in EXPLAIN: idx_user_id,idx_user_status_pay,idx_user_id_trade_code_status_amount_create_time_is_del), as well as the id index from the ORDER BY condition (the primary key index). Suppose the randomly sampled pages looked like this:

The blue sections represent sampled pages, with each index in the table sampling 20 pages by default. Assuming our sampling results match the diagram, other indexes were sampled relatively evenly, leading the optimizer to estimate that using other indexes would require scanning tens of thousands of rows. However, the last page sampled from the primary key happened to contain all records for this specific user at the end. Since the statement includes LIMIT 20, if there happen to be 20 records at the end (all meeting the WHERE conditions), the optimizer would conclude that scanning backwards through the primary key for 20 records would be most efficient. This causes the optimizer to believe primary key scanning has the lowest cost. In reality, this isn’t true because sampling data doesn’t represent the full picture—there might be many, many records that don’t belong to this user afterward, especially in large tables.

If we remove the LIMIT clause, EXPLAIN shows the correct index is chosen, because without limiting results, the primary key index would need to scan the entire table, making it impossible to have lower cost than user_id-related indexes.

Why Different user_ids with Normal Execution Times Show Different Index Choices#

Similarly, since all index optimizer statistics are randomly sampled, as tables grow larger and indexes expand, plus the potential addition of more complex indexes, this amplifies the variance in analyzing index costs with different parameters (different user_ids in our case).

This brings up another issue you might encounter: when adding a composite index on top of existing indexes (for example, originally having only idx_user_id, then adding idx_user_status_pay), SQL queries that previously only searched by user_id might sometimes use idx_user_id and sometimes use idx_user_status_pay. Using idx_user_status_pay will likely be slower than using idx_user_id. Therefore, adding new composite indexes might slow down other business SQL queries that weren’t meant to be optimized by the composite index, so this should be done carefully.

Problems This Design Creates as Data Volume Grows and Tables Become More Complex#

Since statistics aren’t updated in real-time but only when modified rows exceed a certain percentage, and statistics are sampled rather than comprehensive, these statistics can be quite inaccurate when table data volumes are large.

Since statistics are inherently inaccurate, complex table designs with diverse data types, many fields, and especially various composite indexes make statistics even more inaccurate.

As a side note: MySQL tables shouldn’t be too large and need proper horizontal partitioning, while fields shouldn’t be too numerous, requiring good vertical partitioning. Indexes shouldn’t be added carelessly—adding too many exacerbates statistical inaccuracy, leading to wrong index choices.

Manual ANALYZE TABLE adds read locks to tables, blocking updates and transactions. This can’t be used on critical online business tables. Consider scheduling ANALYZE for critical business tables during low-traffic periods.

Relying on automatic table refresh mechanisms makes parameters difficult to adjust (mainly the

STATS_SAMPLE_PAGESparameter—we generally won’t changeSTATS_PERSISTENTsince we can’t accept in-memory storage due to restart delays, and we won’t disableSTATS_AUTO_RECALCas it would make optimizer analysis increasingly inaccurate). It’s hard to predict optimal values. Business growth and user behavior causing data skew are also unpredictable. Using ALTER TABLE to modify a specific table’sSTATS_SAMPLE_PAGEShas the same effect as ANALYZE TABLE—adding read locks and blocking updates and transactions. This can’t be used on critical online business tables, so it’s best to estimate large table scales from the beginning, though this is challenging.

Conclusions and Recommendations#

In summary, for production tables with large data volumes, I recommend proactively controlling each table’s data volume through database and table partitioning. However, business growth and product requirements continuously iterate and become more complex, making it difficult to guarantee we won’t end up with large tables having complex indexes. In such situations, we need to appropriately increase STATS_SAMPLE_PAGES while using FORCE INDEX to guide critical user-triggered queries toward the correct indexes. This prevents the issues described in this article where inaccurate MySQL optimizer statistics cause certain user IDs to use wrong indexes.