Issue Description and Background

Last night, our gateway experienced an avalanche for a period of time. The symptoms were as follows:

1. Various microservices continuously reported exceptions: connection closed while writing HTTP response:

reactor.netty.http.client.PrematureCloseException: Connection prematurely closed BEFORE response

2. Simultaneously, there were exceptions where requests hadn’t finished reading but connections were already closed:

org.springframework.http.converter.HttpMessageNotReadableException: I/O error while reading input message; nested exception is java.io.IOException: UT000128: Remote peer closed connection before all data could be read

3. Frontend continuously triggered request timeout alerts: 504 Gateway Time-out

4. Gateway processes kept failing health checks and being restarted

5. After each restart, the gateway instance was immediately hit by a surge of requests, peaking at 2000 qps per instance. For comparison, an instance handles about 500 qps during quiet periods, and auto-scaling normally keeps it under 1000 qps during busy periods. The health check endpoint took extremely long to respond, so instances kept being restarted

Issues 1 and 2 were likely caused by the gateway’s continuous restarts and failed graceful shutdowns for some reason, leading to forced shutdowns that abruptly terminated connections, resulting in the related exceptions.

Our gateway is built on Spring Cloud Gateway and scales automatically based on CPU load. Strangely, when request volume spiked, CPU utilization did not rise much and stayed around 60%. Because CPU load never reached the scaling threshold, auto-scaling never triggered. To resolve the incident quickly, we manually added several gateway instances to bring the load under 1000 qps per instance, which solved the problem for the time being.

Problem Analysis

To resolve this issue for good, we analyzed the incident with JFR (Java Flight Recorder). We started from the clues we already had:

- Spring Cloud Gateway is an asynchronous reactive gateway built on Spring WebFlux, with a small, fixed number of HTTP event-loop threads (Reactor Netty defaults to the number of available CPU cores with a minimum of 4, which gives 4 in our case).

- Gateway processes continuously failed health checks, which called the /actuator/health endpoint that kept timing out.

Health check endpoint timeouts generally have two causes:

- The health check interface gets blocked while checking certain components. For example, if the database gets stuck, database health checks might never return.

- The HTTP threads are too busy to pick up the health check request before it times out.

We first examined the timed stack traces in JFR to see if HTTP threads were stuck on health checks. Looking at the thread stacks after the issue occurred, focusing on those 4 HTTP threads, we found they all had basically identical stacks, all executing Redis commands:

"reactor-http-nio-1" #68 daemon prio=5 os_prio=0 cpu=70832.99ms elapsed=199.98s tid=0x0000ffffb2f8a740 nid=0x69 waiting on condition [0x0000fffe8adfc000]

java.lang.Thread.State: TIMED_WAITING (parking)

at jdk.internal.misc.Unsafe.park([email protected]/Native Method)

- parking to wait for <0x00000007d50eddf8> (a java.util.concurrent.CompletableFuture$Signaller)

at java.util.concurrent.locks.LockSupport.parkNanos([email protected]/LockSupport.java:234)

at java.util.concurrent.CompletableFuture$Signaller.block([email protected]/CompletableFuture.java:1798)

at java.util.concurrent.ForkJoinPool.managedBlock([email protected]/ForkJoinPool.java:3128)

at java.util.concurrent.CompletableFuture.timedGet([email protected]/CompletableFuture.java:1868)

at java.util.concurrent.CompletableFuture.get([email protected]/CompletableFuture.java:2021)

at io.lettuce.core.protocol.AsyncCommand.await(AsyncCommand.java:83)

at io.lettuce.core.internal.Futures.awaitOrCancel(Futures.java:244)

at io.lettuce.core.FutureSyncInvocationHandler.handleInvocation(FutureSyncInvocationHandler.java:75)

at io.lettuce.core.internal.AbstractInvocationHandler.invoke(AbstractInvocationHandler.java:80)

at com.sun.proxy.$Proxy245.get(Unknown Source)

at org.springframework.data.redis.connection.lettuce.LettuceStringCommands.get(LettuceStringCommands.java:68)

at org.springframework.data.redis.connection.DefaultedRedisConnection.get(DefaultedRedisConnection.java:267)

at org.springframework.data.redis.connection.DefaultStringRedisConnection.get(DefaultStringRedisConnection.java:406)

at org.springframework.data.redis.core.DefaultValueOperations$1.inRedis(DefaultValueOperations.java:57)

at org.springframework.data.redis.core.AbstractOperations$ValueDeserializingRedisCallback.doInRedis(AbstractOperations.java:60)

at org.springframework.data.redis.core.RedisTemplate.execute(RedisTemplate.java:222)

at org.springframework.data.redis.core.RedisTemplate.execute(RedisTemplate.java:189)

at org.springframework.data.redis.core.AbstractOperations.execute(AbstractOperations.java:96)

at org.springframework.data.redis.core.DefaultValueOperations.get(DefaultValueOperations.java:53)

at com.jojotech.apigateway.filter.AccessCheckFilter.traced(AccessCheckFilter.java:196)

at com.jojotech.apigateway.filter.AbstractTracedFilter.filter(AbstractTracedFilter.java:39)

at org.springframework.cloud.gateway.handler.FilteringWebHandler$GatewayFilterAdapter.filter(FilteringWebHandler.java:137)

at org.springframework.cloud.gateway.filter.OrderedGatewayFilter.filter(OrderedGatewayFilter.java:44)

at org.springframework.cloud.gateway.handler.FilteringWebHandler$DefaultGatewayFilterChain.lambda$filter$0(FilteringWebHandler.java:117)

at org.springframework.cloud.gateway.handler.FilteringWebHandler$DefaultGatewayFilterChain$$Lambda$1478/0x0000000800b84c40.get(Unknown Source)

at reactor.core.publisher.MonoDefer.subscribe(MonoDefer.java:44)

at reactor.core.publisher.Mono.subscribe(Mono.java:4150)

at com.jojotech.apigateway.common.TracedMono.subscribe(TracedMono.java:24)

at reactor.core.publisher.MonoDefer.subscribe(MonoDefer.java:52)

at reactor.core.publisher.Mono.subscribe(Mono.java:4150)

at com.jojotech.apigateway.common.TracedMono.subscribe(TracedMono.java:24)

at reactor.core.publisher.MonoDefer.subscribe(MonoDefer.java:52)

at reactor.core.publisher.Mono.subscribe(Mono.java:4150)

at com.jojotech.apigateway.common.TracedMono.subscribe(TracedMono.java:24)

at reactor.core.publisher.MonoDefer.subscribe(MonoDefer.java:52)

at reactor.core.publisher.Mono.subscribe(Mono.java:4150)

at com.jojotech.apigateway.common.TracedMono.subscribe(TracedMono.java:24)

at reactor.core.publisher.MonoDefer.subscribe(MonoDefer.java:52)

at reactor.core.publisher.Mono.subscribe(Mono.java:4150)

at com.jojotech.apigateway.common.TracedMono.subscribe(TracedMono.java:24)

at reactor.core.publisher.MonoDefer.subscribe(MonoDefer.java:52)

at reactor.core.publisher.InternalMonoOperator.subscribe(InternalMonoOperator.java:64)

at reactor.core.publisher.MonoDefer.subscribe(MonoDefer.java:52)

at reactor.core.publisher.MonoDefer.subscribe(MonoDefer.java:52)

at reactor.core.publisher.Mono.subscribe(Mono.java:4150)

at reactor.core.publisher.MonoIgnoreThen$ThenIgnoreMain.subscribeNext(MonoIgnoreThen.java:255)

at reactor.core.publisher.MonoIgnoreThen.subscribe(MonoIgnoreThen.java:51)

at reactor.core.publisher.MonoFlatMap$FlatMapMain.onNext(MonoFlatMap.java:157)

at reactor.core.publisher.FluxSwitchIfEmpty$SwitchIfEmptySubscriber.onNext(FluxSwitchIfEmpty.java:73)

at reactor.core.publisher.MonoNext$NextSubscriber.onNext(MonoNext.java:82)

at reactor.core.publisher.FluxConcatMap$ConcatMapImmediate.innerNext(FluxConcatMap.java:281)

at reactor.core.publisher.FluxConcatMap$ConcatMapInner.onNext(FluxConcatMap.java:860)

at reactor.core.publisher.FluxMap$MapSubscriber.onNext(FluxMap.java:120)

at reactor.core.publisher.FluxSwitchIfEmpty$SwitchIfEmptySubscriber.onNext(FluxSwitchIfEmpty.java:73)

at reactor.core.publisher.Operators$MonoSubscriber.complete(Operators.java:1815)

at reactor.core.publisher.MonoFlatMap$FlatMapMain.onNext(MonoFlatMap.java:151)

at reactor.core.publisher.FluxMap$MapSubscriber.onNext(FluxMap.java:120)

at reactor.core.publisher.MonoNext$NextSubscriber.onNext(MonoNext.java:82)

at reactor.core.publisher.FluxConcatMap$ConcatMapImmediate.innerNext(FluxConcatMap.java:281)

at reactor.core.publisher.FluxConcatMap$ConcatMapInner.onNext(FluxConcatMap.java:860)

at reactor.core.publisher.FluxOnErrorResume$ResumeSubscriber.onNext(FluxOnErrorResume.java:79)

at reactor.core.publisher.MonoPeekTerminal$MonoTerminalPeekSubscriber.onNext(MonoPeekTerminal.java:180)

at reactor.core.publisher.Operators$MonoSubscriber.complete(Operators.java:1815)

at reactor.core.publisher.MonoFilterWhen$MonoFilterWhenMain.onNext(MonoFilterWhen.java:149)

at reactor.core.publisher.Operators$ScalarSubscription.request(Operators.java:2397)

at reactor.core.publisher.MonoFilterWhen$MonoFilterWhenMain.onSubscribe(MonoFilterWhen.java:112)

at reactor.core.publisher.MonoJust.subscribe(MonoJust.java:54)

at reactor.core.publisher.Mono.subscribe(Mono.java:4150)

at reactor.core.publisher.FluxConcatMap$ConcatMapImmediate.drain(FluxConcatMap.java:448)

at reactor.core.publisher.FluxConcatMap$ConcatMapImmediate.onNext(FluxConcatMap.java:250)

at reactor.core.publisher.FluxDematerialize$DematerializeSubscriber.onNext(FluxDematerialize.java:98)

at reactor.core.publisher.FluxDematerialize$DematerializeSubscriber.onNext(FluxDematerialize.java:44)

at reactor.core.publisher.FluxIterable$IterableSubscription.slowPath(FluxIterable.java:270)

at reactor.core.publisher.FluxIterable$IterableSubscription.request(FluxIterable.java:228)

at reactor.core.publisher.FluxDematerialize$DematerializeSubscriber.request(FluxDematerialize.java:127)

at reactor.core.publisher.FluxConcatMap$ConcatMapImmediate.onSubscribe(FluxConcatMap.java:235)

at reactor.core.publisher.FluxDematerialize$DematerializeSubscriber.onSubscribe(FluxDematerialize.java:77)

at reactor.core.publisher.FluxIterable.subscribe(FluxIterable.java:164)

at reactor.core.publisher.FluxIterable.subscribe(FluxIterable.java:86)

at reactor.core.publisher.InternalFluxOperator.subscribe(InternalFluxOperator.java:62)

at reactor.core.publisher.FluxDefer.subscribe(FluxDefer.java:54)

at reactor.core.publisher.Mono.subscribe(Mono.java:4150)

at reactor.core.publisher.FluxConcatMap$ConcatMapImmediate.drain(FluxConcatMap.java:448)

at reactor.core.publisher.FluxConcatMap$ConcatMapImmediate.onSubscribe(FluxConcatMap.java:218)

at reactor.core.publisher.FluxIterable.subscribe(FluxIterable.java:164)

at reactor.core.publisher.FluxIterable.subscribe(FluxIterable.java:86)

at reactor.core.publisher.InternalMonoOperator.subscribe(InternalMonoOperator.java:64)

at reactor.core.publisher.MonoDefer.subscribe(MonoDefer.java:52)

at reactor.core.publisher.InternalMonoOperator.subscribe(InternalMonoOperator.java:64)

at reactor.core.publisher.MonoDefer.subscribe(MonoDefer.java:52)

at org.springframework.cloud.sleuth.instrument.web.TraceWebFilter$MonoWebFilterTrace.subscribe(TraceWebFilter.java:184)

at reactor.core.publisher.InternalMonoOperator.subscribe(InternalMonoOperator.java:64)

at reactor.core.publisher.MonoDefer.subscribe(MonoDefer.java:52)

at reactor.core.publisher.InternalMonoOperator.subscribe(InternalMonoOperator.java:64)

at reactor.core.publisher.MonoDefer.subscribe(MonoDefer.java:52)

at reactor.core.publisher.InternalMonoOperator.subscribe(InternalMonoOperator.java:64)

at reactor.core.publisher.MonoDefer.subscribe(MonoDefer.java:52)

at reactor.core.publisher.Mono.subscribe(Mono.java:4150)

at reactor.core.publisher.MonoIgnoreThen$ThenIgnoreMain.subscribeNext(MonoIgnoreThen.java:255)

at reactor.core.publisher.MonoIgnoreThen.subscribe(MonoIgnoreThen.java:51)

at reactor.core.publisher.InternalMonoOperator.subscribe(InternalMonoOperator.java:64)

at reactor.netty.http.server.HttpServer$HttpServerHandle.onStateChange(HttpServer.java:915)

at reactor.netty.ReactorNetty$CompositeConnectionObserver.onStateChange(ReactorNetty.java:654)

at reactor.netty.transport.ServerTransport$ChildObserver.onStateChange(ServerTransport.java:478)

at reactor.netty.http.server.HttpServerOperations.onInboundNext(HttpServerOperations.java:526)

at reactor.netty.channel.ChannelOperationsHandler.channelRead(ChannelOperationsHandler.java:94)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

at reactor.netty.http.server.HttpTrafficHandler.channelRead(HttpTrafficHandler.java:209)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

at reactor.netty.http.server.logging.AccessLogHandlerH1.channelRead(AccessLogHandlerH1.java:59)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

at io.netty.channel.CombinedChannelDuplexHandler$DelegatingChannelHandlerContext.fireChannelRead(CombinedChannelDuplexHandler.java:436)

at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:324)

at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:296)

at io.netty.channel.CombinedChannelDuplexHandler.channelRead(CombinedChannelDuplexHandler.java:251)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:357)

at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1410)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:379)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:365)

at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:919)

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:166)

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:719)

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:655)

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:581)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:493)

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:989)

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

at java.lang.Thread.run([email protected]/Thread.java:834)

We found that the HTTP threads were not stuck on health checks, and no other thread had a health check-related stack either (in an async environment health checks are asynchronous too, and parts of them may be handed off to other threads). So the health check requests must have timed out while still waiting to be processed.
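Checking a thread dump by eye works for 4 threads, but the same check can be scripted. The following is a minimal sketch, not the tooling we actually used: it assumes the dump is available as text in the HotSpot format shown above, and the class name, method name, and the sample dump in main are made up for illustration.

```java
class HttpThreadFrameCounter {
    /**
     * Counts threads whose name starts with threadPrefix and whose stack
     * contains at least one frame mentioning frameFragment.
     * Assumes the HotSpot text format: a header line starting with the quoted
     * thread name, followed by "at ..." frame lines.
     */
    static int countThreadsWithFrame(String dump, String threadPrefix, String frameFragment) {
        int matches = 0;
        boolean inTargetThread = false;
        boolean counted = false;
        for (String raw : dump.split("\\R")) {
            String line = raw.trim();
            if (line.startsWith("\"")) {
                // A quoted name marks the start of a new thread section.
                inTargetThread = line.startsWith("\"" + threadPrefix);
                counted = false;
            } else if (inTargetThread && !counted
                    && line.startsWith("at ") && line.contains(frameFragment)) {
                matches++;
                counted = true; // count each thread at most once
            }
        }
        return matches;
    }

    public static void main(String[] args) {
        // Hypothetical, shortened dump: one HTTP thread blocked in RedisTemplate,
        // one idle HTTP thread, and one unrelated thread.
        String dump = String.join("\n",
            "\"reactor-http-nio-1\" #68 daemon prio=5",
            "   java.lang.Thread.State: TIMED_WAITING (parking)",
            "\tat io.lettuce.core.protocol.AsyncCommand.await(AsyncCommand.java:83)",
            "\tat org.springframework.data.redis.core.RedisTemplate.execute(RedisTemplate.java:222)",
            "\tat org.springframework.data.redis.core.RedisTemplate.execute(RedisTemplate.java:189)",
            "",
            "\"reactor-http-nio-2\" #69 daemon prio=5",
            "\tat io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:493)",
            "",
            "\"main\" #1 prio=5",
            "\tat org.springframework.data.redis.core.RedisTemplate.execute(RedisTemplate.java:222)");
        // prints 1
        System.out.println(countThreadsWithFrame(dump, "reactor-http-nio-", "RedisTemplate.execute"));
    }
}
```

If the count equals the number of HTTP threads across several consecutive dumps, as it did in our case, the event loops are spending their time inside a blocking call.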

Why would this happen? I then noticed that the stack goes through RedisTemplate, the synchronous Redis API of spring-data-redis. I remembered that when I wrote this code I had taken a shortcut and skipped the async API, since I was only checking whether a key exists and updating its expiration time. Could the synchronous API be blocking the HTTP threads and causing the avalanche?

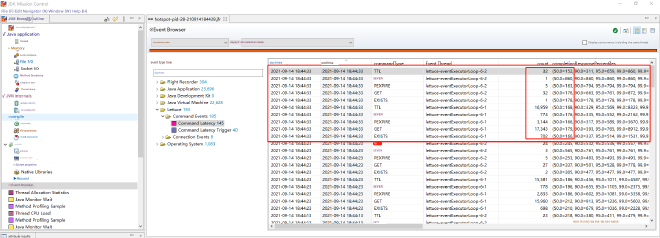

Let’s verify this hypothesis. Our project accesses Redis through spring-data-redis with a Lettuce connection pool, and we have enabled the enhanced JFR monitoring for Lettuce commands. You can refer to my article: “This New Redis Connection Pool Monitoring Method is Awesome - Let Me Add Some Extra Seasoning”. As of now, my pull request has been merged, and this feature will be released in version 6.2.x. Let’s look at the Redis commands collected around the time of the issue:

Let’s estimate the blocking time caused by Redis commands. Samples are collected every 10 s, count is the number of commands in that window, and latencies are in microseconds. Multiplying each command count by its median (50th percentile) latency, summing the results, and dividing by 10 (the window length in seconds) gives the blocking time per second:

32*152=4864

1*860=860

5*163=815

32*176=5632

1*178=178

16959*168=2849112

774*176=136224

3144*166=521904

17343*179=3104397

702*166=116532

Total: 6740518

6740518 / 10 = 674051.8 us = 0.67s
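The same arithmetic as a small program, in case you want to redo it with your own numbers. This is only a sketch: the class and method names are made up, and each row is a (count, median latency in microseconds) pair copied from the lines above.

```java
class RedisBlockingEstimate {
    // Each row: {command count in the 10 s window, median latency in microseconds}.
    static final long[][] SAMPLES = {
        {32, 152}, {1, 860}, {5, 163}, {32, 176}, {1, 178},
        {16959, 168}, {774, 176}, {3144, 166}, {17343, 179}, {702, 166}
    };

    /** Sum of count * median over all rows, in microseconds. */
    static long totalMicros(long[][] samples) {
        long total = 0;
        for (long[] row : samples) {
            total += row[0] * row[1];
        }
        return total;
    }

    /** Estimated seconds spent blocked per wall-clock second. */
    static double blockedSecondsPerSecond(long[][] samples, int windowSeconds) {
        return totalMicros(samples) / 1_000_000.0 / windowSeconds;
    }

    public static void main(String[] args) {
        System.out.println(totalMicros(SAMPLES)); // prints 6740518
        System.out.println(blockedSecondsPerSecond(SAMPLES, 10));
    }
}
```

Because it uses the median, this is a lower-bound style estimate; substituting a higher percentile for the second column gives a more pessimistic figure.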

This estimate uses only the median. Judging from the latency distribution in the graph, the real figure is higher, so the time spent blocked on synchronous Redis calls can easily exceed 1 s per second. With request volume that does not drop, requests keep piling up and eventually cause an avalanche.

Moreover, because these are blocking calls, the threads spend most of their time waiting for I/O. CPU usage therefore does not rise, and auto-scaling never kicks in. During business peak hours we scale out in advance on a schedule, so gateway instances never reached the problematic load and the issue did not show up.
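To make the effect concrete, here is a minimal sketch using only the JDK. It is an analogy, not Netty's event loop: a fixed pool of 4 threads stands in for the HTTP threads, Thread.sleep stands in for a synchronous Redis call, and a trivial task stands in for the health check. All names and numbers are made up for illustration.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

class EventLoopStarvationDemo {
    /** Returns how long (ms) a trivial "health check" task waited before it started running. */
    static long healthCheckQueueDelayMillis(int threads, int blockingTasks, long blockMillis) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            for (int i = 0; i < blockingTasks; i++) {
                pool.execute(() -> {
                    try {
                        Thread.sleep(blockMillis); // stands in for a synchronous Redis call
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            long submitted = System.nanoTime();
            // The health check does no work at all; it only measures its own queueing delay.
            Future<Long> health = pool.submit(() -> System.nanoTime() - submitted);
            return TimeUnit.NANOSECONDS.toMillis(health.get());
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdownNow();
        }
    }

    public static void main(String[] args) {
        System.out.println("idle pool: " + healthCheckQueueDelayMillis(4, 0, 100) + " ms");
        System.out.println("busy pool: " + healthCheckQueueDelayMillis(4, 8, 100) + " ms");
    }
}
```

In the busy case the health check waits behind two rounds of sleeping tasks even though the threads burn almost no CPU while sleeping, which is the same combination we saw: slow health checks with CPU well below the scaling threshold.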

Problem Resolution

Let’s rewrite the original code. The original version, which used the synchronous spring-data-redis API, was essentially the body of public Mono<Void> traced(ServerWebExchange exchange, GatewayFilterChain chain), the method our filters implement on top of the spring-cloud-gateway filter interface:

if (StringUtils.isBlank(token)) {

//If token doesn't exist, decide whether to continue the request or return unauthorized status based on path

return continueOrUnauthorized(path, exchange, chain, headers);

} else {

try {

String accessTokenValue = redisTemplate.opsForValue().get(token);

if (StringUtils.isNotBlank(accessTokenValue)) {

//If accessTokenValue is not empty, extend for 4 hours to ensure logged-in users don't have tokens expire as long as they have activity

Long expire = redisTemplate.getExpire(token);

log.info("accessTokenValue = {}, expire = {}", accessTokenValue, expire);

if (expire != null && expire < 4 * 60 * 60) {

redisTemplate.expire(token, 4, TimeUnit.HOURS);

}

//Parse to get userId

JSONObject accessToken = JSON.parseObject(accessTokenValue);

String userId = accessToken.getString("userId");

//Only valid if userId is not empty

if (StringUtils.isNotBlank(userId)) {

//Parse Token

HttpHeaders newHeaders = parse(accessToken);

//Continue request

return FilterUtil.changeRequestHeader(exchange, chain, newHeaders);

}

}

} catch (Exception e) {

log.error("read accessToken error: {}", e.getMessage(), e);

}

//If token is invalid, decide whether to continue request or return unauthorized status based on path

return continueOrUnauthorized(path, exchange, chain, headers);

}

Converted to the asynchronous API (redisTemplate is now a reactive template whose operations return Mono):

if (StringUtils.isBlank(token)) {

return continueOrUnauthorized(path, exchange, chain, headers);

} else {

HttpHeaders finalHeaders = headers;

//Must wrap with tracedPublisherFactory, otherwise trace information will be lost. Refer to my other article: Spring Cloud Gateway Has No Trace Information, I'm Completely Confused

return tracedPublisherFactory.getTracedMono(

redisTemplate.opsForValue().get(token)

//Must switch threads, otherwise the downstream operators keep running on Lettuce's I/O thread. Time-consuming work there delays other Redis commands, and the time also counts towards the Redis command timeout

.publishOn(Schedulers.parallel()),

exchange

).doOnSuccess(accessTokenValue -> {

if (accessTokenValue != null) {

//AccessToken renewal, 4 hours

tracedPublisherFactory.getTracedMono(redisTemplate.getExpire(token).publishOn(Schedulers.parallel()), exchange).doOnSuccess(expire -> {

log.info("accessTokenValue = {}, expire = {}", accessTokenValue, expire);

if (expire != null && expire.toHours() < 4) {

redisTemplate.expire(token, Duration.ofHours(4)).subscribe();

}

}).subscribe();

}

})

//Must replace an empty result with a non-null default, otherwise flatMap won't execute. switchIfEmpty can't be used at the end either: the overall return type is Mono<Void>, which is always empty, so each request would be sent twice.

.defaultIfEmpty("")

.flatMap(accessTokenValue -> {

try {

if (StringUtils.isNotBlank(accessTokenValue)) {

JSONObject accessToken = JSON.parseObject(accessTokenValue);

String userId = accessToken.getString("userId");

if (StringUtils.isNotBlank(userId)) {

//Parse Token

HttpHeaders newHeaders = parse(accessToken);

//Continue request

return FilterUtil.changeRequestHeader(exchange, chain, newHeaders);

}

}

return continueOrUnauthorized(path, exchange, chain, finalHeaders);

} catch (Exception e) {

log.error("read accessToken error: {}", e.getMessage(), e);

return continueOrUnauthorized(path, exchange, chain, finalHeaders);

}

});

}

Here are several key points to note:

- Spring-Cloud-Sleuth adds tracing to the Flux and Mono that Spring-WebFlux creates. If we create a new Flux or Mono in a Filter, it carries no trace information, and we have to add it manually. See my other article: Spring Cloud Gateway Has No Trace Information, I’m Completely Confused

- With the spring-data-redis + Lettuce connection pool combination, the async API should switch to a different thread pool once the response arrives. Otherwise the downstream operators keep running on Lettuce’s I/O thread. Time-consuming work there delays other Redis commands, and the time also counts towards the Redis command timeout

- Project Reactor skips downstream flatMap, map, and other operators when an upstream Mono completes empty (for example, a Redis get for a missing key; a Mono can never carry null). If the chain ends there, the frontend receives a broken response, so we need to handle the empty case at every intermediate step.

- The core method of the GatewayFilter interface in spring-cloud-gateway returns Mono<Void>. A Mono<Void> is empty by nature, so we can’t put switchIfEmpty at the end of the chain to simplify empty handling in the intermediate steps. Doing so would cause each request to be sent twice.
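The thread-switching point is easy to get wrong, so here is a minimal sketch of the same idea using only the JDK's CompletableFuture. It is an analogy for what publishOn does in the Reactor code above, not the Lettuce or Reactor API: the "redis-io" thread stands in for the client's I/O thread, "worker" stands in for Schedulers.parallel(), and both names are made up.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class CallbackHandOffDemo {
    /** Returns {thread that ran the inline callback, thread that ran the handed-off callback}. */
    static String[] callbackThreads() {
        ExecutorService redisIo = Executors.newSingleThreadExecutor(r -> new Thread(r, "redis-io"));
        ExecutorService workers = Executors.newSingleThreadExecutor(r -> new Thread(r, "worker"));
        try {
            CompletableFuture<String> response = new CompletableFuture<>();

            // Registered before completion, so this callback runs on whichever
            // thread completes the future: here, the I/O thread.
            CompletableFuture<String> inline =
                response.thenApply(value -> Thread.currentThread().getName());

            // The Async variant with an explicit executor always hops to that executor,
            // which frees the I/O thread as soon as the response has been delivered.
            CompletableFuture<String> handedOff =
                response.thenApplyAsync(value -> Thread.currentThread().getName(), workers);

            // The I/O thread delivers the response.
            redisIo.execute(() -> response.complete("token-value"));

            return new String[] {inline.join(), handedOff.join()};
        } finally {
            redisIo.shutdown();
            workers.shutdown();
        }
    }

    public static void main(String[] args) {
        String[] threads = callbackThreads();
        System.out.println("inline callback ran on:     " + threads[0]); // redis-io
        System.out.println("handed-off callback ran on: " + threads[1]); // worker
    }
}
```

Anything slow in the inline callback would hold up every other command waiting on that I/O thread, which is why the rewritten filter switches threads right after each Redis call.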

After this change, we stress tested the gateway and could not reproduce the issue even at 20k qps on a single instance.