Tackling Performance Degradation in Sharded MySQL Tables: Understanding the Root Cause and Solutions#

When business requirements demand data query volumes and concurrent loads that exceed the limits of a single MySQL instance, database sharding becomes the go-to solution. Of course, there are now many NewSQL distributed database options available. If you’re using MySQL, you might want to consider TiDB (which implements the MySQL protocol and is compatible with MySQL clients and SQL statements). If you’re working with PostgreSQL, YugaByteDB could be your solution (implementing the PostgreSQL protocol with full client and SQL compatibility). Both platforms offer their own cloud deployment solutions that you can explore:

However, traditional sharding projects still rely on conventional relational databases like MySQL and PostgreSQL as their foundation. Typically, when a business starts out, teams will consider partitioning data across multiple tables using a specific shard key. Take order tables, for example - we might estimate that users primarily need to query order records from the past year. For orders older than a year, we’d provide alternative access points that query archived databases like HBase rather than the operational business database.

Let’s say we estimate that user orders within a year won’t exceed 1 billion records, with update concurrency (TPS, not query QPS) staying below 100,000/s. In this case, we might consider splitting into 64 tables (preferably using powers of 2, since modulo operations with 2^n can be optimized to bitwise AND operations with 2^n - 1, reducing computational overhead for shard key calculations). We’d also implement regular archival processes to remove year-old data using statements like delete from table for “complete deletion” (note the quotes around deletion). This approach maintains the business table data volume at manageable levels.

However, as time passes, you’ll notice that certain queries with shard keys (in this case, user_id) start slowing down, with some incorrectly choosing local indexes.

Why Queries Get Progressively Slower#

Consider this SQL example:

select * from t_pay_record

WHERE

((

user_id = 'user_id1'

AND is_del = 0

))

ORDER BY

id DESC

LIMIT 20

Here, the shard key is user_id.

One factor is that data volumes might exceed our expectations, causing certain sharded tables to grow beyond optimal thresholds. This leads to increasingly inaccurate random sampling for MySQL indexes. Statistics aren’t updated in real-time but only when the number of updated rows exceeds a certain percentage. Moreover, these statistics use sampling rather than full table analysis. When tables become very large, maintaining accurate statistics becomes challenging.

Relying on the table’s automatic refresh mechanism makes parameter tuning difficult (primarily the STATS_SAMPLE_PAGES parameter - we typically don’t modify STATS_PERSISTENT since in-memory storage would require table re-analysis after database restarts, slowing startup times, and we don’t disable STATS_AUTO_RECALC as this would make optimizer analysis increasingly inaccurate). Predicting optimal values is nearly impossible, and business growth combined with user behavior patterns can cause unpredictable data skew.

When modifying a table’s STATS_SAMPLE_PAGES via ALTER TABLE, it triggers the same effect as running ANALYZE on the table, acquiring read locks that block updates and transactions. This makes it unsuitable for critical online business tables. Ideally, we should estimate large table volumes from the start, but this proves quite challenging in practice.

Therefore, for tables with substantial data volumes, we should proactively control individual table sizes through sharding. However, business growth and product requirements continuously evolve and become more complex, making it difficult to guarantee we won’t encounter large tables with complex indexing needs. In such situations, we need to appropriately increase STATS_SAMPLE_PAGES and use force index to guide critical user-triggered queries toward the correct indexes.

But sometimes, even when the correct index is used, queries remain somewhat slow. Upon examining the number of rows scanned by the SQL, you’ll find it’s not particularly high.

+----+-------------+--------------+------------+-------+-----------------------------------------------------------------------------------------+-------------+---------+------+-------+----------+-------------+

| id | select_type | table | partitions | type | possible_keys | key | key_len | ref | rows | filtered | Extra |

+----+-------------+--------------+------------+-------+-----------------------------------------------------------------------------------------+-------------+---------+------+-------+----------+-------------+

| 1 | SIMPLE | t_pay_record | NULL | index | idx_user_id,idx_user_status_pay,idx_user_id_trade_code_status_amount_create_time_is_del | idx_user_id | 32 | NULL | 16 | 0.01 | Using where |

+----+-------------+--------------+------------+-------+-----------------------------------------------------------------------------------------+-------------+---------+------+-------+----------+-------------+

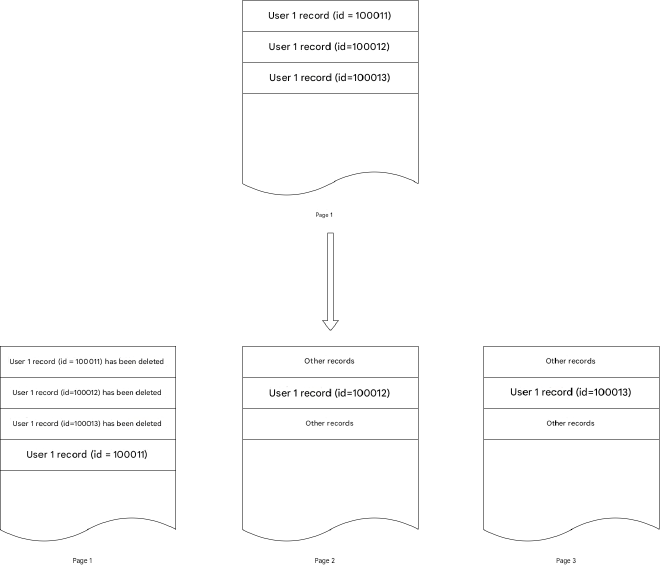

You might still encounter occasional slow SQL queries that become more frequent over time. This relates to MySQL InnoDB’s deletion mechanism. Most business tables use the InnoDB engine with the default Dynamic row format. When inserting data in this format, the structure looks roughly like this:

The record header contains a deletion flag:

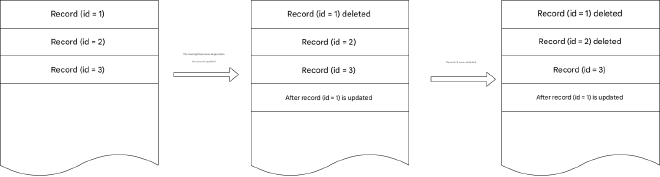

When updates occur that change record length (such as variable-length fields becoming longer), the original record gets marked for deletion, and an updated record is created at the end. When deleting a record, only the deletion flag in the record header gets marked.

To address potential fragmentation, MySQL InnoDB has expectations and measures in place: the InnoDB engine only stores data occupying 93% of each page’s space, leaving the remainder to accommodate length-changing updates without forcing data to other pages. However, deletions essentially waste storage space completely.

Typically, this doesn’t cause significant performance degradation because deletions usually target older data while updates concentrate on recent data. For instance, order updates generally affect recent orders, with older orders rarely being updated, and archived deletions typically target much older orders. However, as business logic becomes more complex, archival logic also grows more intricate. Different order types might have varying retention periods - perhaps year-old pre-orders remain unsettled and can’t be archived. Over time, your data might look like this:

This causes what originally required scanning few pages to progressively require scanning more pages as fragmentation increases, making SQL execution increasingly slow.

The above describes impacts on table data storage itself. For secondary indexes, the MVCC mechanism means frequent updates to indexed fields also create many gaps in indexes. Reference documentation: https://dev.mysql.com/doc/refman/8.0/en/innodb-multi-versioning.html

InnoDB multiversion concurrency control (MVCC) treats secondary indexes differently than clustered indexes. Records in a clustered index are updated in-place, and their hidden system columns point undo log entries from which earlier versions of records can be reconstructed. Unlike clustered index records, secondary index records do not contain hidden system columns nor are they updated in-place.

We know that MySQL InnoDB updates clustered indexes in-place, but for secondary indexes, when secondary index columns are updated, the original record gets marked for deletion and a new record is created elsewhere. This creates storage fragmentation similar to what we described earlier.

In summary:

- MySQL InnoDB’s Dynamic row format record updates that change record length, along with DELETE statements, actually mark original records for deletion. While MySQL InnoDB optimizes this with reserved space, accumulated archival deletions over time create memory fragmentation that reduces scanning efficiency.

- The MVCC mechanism for secondary index column updates marks original records for deletion and creates new records elsewhere, causing secondary index scanning efficiency to degrade over time.

Solution - Table Rebuilding#

For this situation, we can resolve it through table rebuilding. Rebuilding tables actually achieves two goals: first, it optimizes storage fragmentation and reduces rows to scan; second, it re-analyzes data for more accurate SQL optimizer statistics.

Before MySQL 5.6.17, we needed external tools like pt-online-schema-change to help rebuild tables. This tool works by internally creating new tables, adding triggers on original tables to synchronize updates to new tables while copying data to new tables, then acquiring global locks to rename new tables to original names before deleting original tables. After MySQL 5.6.17, the OPTIMIZE TABLE command became an Online DDL operation, requiring locks only during preparation and final commit phases, not during execution - meaning it won’t block business DML operations. Reference documentation: https://dev.mysql.com/doc/refman/5.6/en/optimize-table.html

Prior to Mysql 5.6.17, OPTIMIZE TABLE does not use online DDL. Consequently, concurrent DML (INSERT, UPDATE, DELETE) is not permitted on a table while OPTIMIZE TABLE is running, and secondary indexes are not created as efficiently.

As of MySQL 5.6.17, OPTIMIZE TABLE uses online DDL for regular and partitioned InnoDB tables, which reduces downtime for concurrent DML operations. The table rebuild triggered by OPTIMIZE TABLE is completed in place. An exclusive table lock is only taken briefly during the prepare phase and the commit phase of the operation. During the prepare phase, metadata is updated and an intermediate table is created. During the commit phase, table metadata changes are committed.

Key considerations when using OPTIMIZE TABLE on InnoDB tables:

- For most InnoDB tables, OPTIMIZE TABLE essentially equals table rebuilding + ANALYZE command (equivalent to

ALTER TABLE ... FORCE), but unlike ANALYZE, OPTIMIZE TABLE is an online DDL with optimized mechanisms that only acquire table locks during preparation and final commit phases, greatly reducing business DML blocking time - making it a viable optimization statement for online execution (for MySQL 5.6.17 and later)

mysql> OPTIMIZE TABLE foo;

+----------+----------+----------+-------------------------------------------------------------------+

| Table | Op | Msg_type | Msg_text |

+----------+----------+----------+-------------------------------------------------------------------+

| test.foo | optimize | note | Table does not support optimize, doing recreate + analyze instead |

| test.foo | optimize | status | OK |

+----------+----------+----------+-------------------------------------------------------------------+

- Nevertheless, execute OPTIMIZE TABLE during low business traffic periods. Like other Online DDL operations, it creates and logs temporary files recording all DML inserts, updates, and deletes during DDL operations. Running during peak traffic might cause logs to exceed

innodb_online_alter_log_max_sizelimits:

mysql> OPTIMIZE TABLE foo;

+----------+----------+----------+----------------------------------------------------------------------------------------------------------------------------+

| Table | Op | Msg_type | Msg_text |

+----------+----------+----------+----------------------------------------------------------------------------------------------------------------------------+

| test.foo | optimize | note | Table does not support optimize, doing recreate + analyze instead |

| test.foo | optimize | error | Creating index 'PRIMARY' required more than 'innodb_online_alter_log_max_size' bytes of modification log. Please try again.|

| test.foo | optimize | status | OK |

+----------+----------+----------+----------------------------------------------------------------------------------------------------------------------------+

If you encounter this error during low traffic periods, you can slightly increase

innodb_online_alter_log_max_size, but don’t make it too large - recommend increasing by 128 MB increments (default is 128 MB). Excessive size can cause two problems: (1) Extended commit times during final phases due to large logs, causing prolonged locking. (2) Continuous writes to temporary files from business pressure that never catch up, causing statements to still execute during peak traffic.When evaluating impact on online business, monitor locks

wait/synch/sxlock/innodb/dict_sys_lockandwait/synch/sxlock/innodb/dict_operation_lock. If these lock-related events become excessive and you notice obvious slow SQL queries online, consider killing the operation and rescheduling OPTIMIZE TABLE for another time.

select thread_id,event_id,event_name,timer_wait from events_waits_history where event_name Like "%dict%" order by thread_id;

SELECT event_name,COUNT_STAR FROM events_waits_summary_global_by_event_name

where event_name Like "%dict%" ORDER BY COUNT_STAR DESC;