This article provides a detailed analysis of JVM memory structure. While there are many online resources about JVM memory structure with accompanying diagrams, much of the information contains errors due to being second-hand or simply repeating others’ work, leading to widespread misconceptions. Additionally, confusion often arises from mixing JVM Specification definitions with actual Hotspot JVM implementations - sometimes people discuss JVM Specification while other times they refer to Hotspot implementation details, creating a fragmented understanding.

This article primarily focuses on Hotspot implementation in Linux x86 environments, closely examining JVM source code and using various JVM tools for verification to help readers understand JVM memory structure. However, this article is limited to analyzing the purpose, usage limitations, and related parameters of these memory areas. Some sections may be quite in-depth, while others may require integration with JVM modules that use these memory areas, which will be described in detail in another series of articles.

1. Starting with Native Memory Tracking#

What exactly does JVM memory include? There are various opinions online. Let’s introduce this topic through an official tool for viewing JVM memory usage: Native Memory Tracking. It’s important to note that this tool can only monitor the size of memory natively allocated by the JVM. If memory is allocated through JDK-wrapped system APIs, it won’t be tracked - for example, DirectBuffer and MappedByteBuffer in Java JDK (though we have other ways to view their current usage). Similarly, if you wrap JNI calls to system calls for memory allocation, these are all beyond Native Memory Tracking’s scope.

1.1. Enabling Native Memory Tracking#

Native Memory Tracking works by implementing instrumentation when JVM requests memory from the system. Note that this instrumentation is not without cost, as we’ll see later. Due to the need for instrumentation and the many places where memory is allocated in the JVM, this instrumentation has significant overhead. Native Memory Tracking is disabled by default and cannot be enabled dynamically (since it relies on instrumentation for statistics collection - if it could be enabled dynamically, memory allocations before enabling wouldn’t be recorded, making dynamic enabling impossible). Currently, it can only be enabled through startup parameters when launching the JVM, using -XX:NativeMemoryTracking:

-XX:NativeMemoryTracking=off: This is the default value, disabling Native Memory Tracking-XX:NativeMemoryTracking=summary: Enables Native Memory Tracking, but only statistics memory usage by JVM subsystems-XX:NativeMemoryTracking=detail: Enables Native Memory Tracking, statistics memory usage from the perspective of different call paths for each JVM memory allocation. Note that enabling detail mode consumes significantly more resources than summary mode because detail mode must parse CallSites to identify call locations. We generally don’t need such detailed information unless we’re JVM developers.

After enabling, we can view Native Memory Tracking information using the jcmd command: jcmd <pid> VM.native_memory:

jcmd <pid> VM.native_memoryorjcmd <pid> VM.native_memory summary: These are equivalent, viewing Native Memory Tracking summary information. The default unit is KB, but you can specify other units, e.g.,jcmd <pid> VM.native_memory summary scale=MBjcmd <pid> VM.native_memory detail: Views Native Memory Tracking detail information, including summary information, memory usage grouped by virtual memory mappings, and memory usage grouped by different CallSite calls. Default unit is KB, but you can specify others, e.g.,jcmd <pid> VM.native_memory detail scale=MB

1.2. Using Native Memory Tracking#

For Java developers and JVM users, we only need to focus on and view Native Memory Tracking summary information. Detail information is generally for JVM developers, and we don’t need to be too concerned about it. Our subsequent analysis will only involve the summary portion of Native Memory Tracking.

Generally, we only consider enabling Native Memory Tracking when encountering problems. After identifying the issue, if we want to disable it, we can use jcmd <pid> VM.native_memory shutdown to close and clean up the instrumentation and memory used by Native Memory tracking. As mentioned earlier, we cannot dynamically enable Native Memory tracking, so once dynamically disabled, this process cannot enable it again.

jcmd provides simple comparison functionality, for example:

- Use

jcmd <pid> VM.native_memory baselineto record current memory usage information - After some time,

jcmd <pid> VM.native_memory summary.diffwill output current Native Memory Tracking summary information, and if there are differences from the baseline in step 1, the differences will be displayed in the corresponding locations

However, this tool is quite crude, and sometimes we don’t know when to call jcmd <pid> VM.native_memory summary.diff appropriately because we’re unsure when memory usage issues we want to observe will occur. So we generally implement it as continuous monitoring.

1.3. Meaning of Each Part in Native Memory Tracking Summary Information#

Here’s an example Native Memory Tracking output:

Total: reserved=10575644KB, committed=443024KB

- Java Heap (reserved=8323072KB, committed=192512KB)

(mmap: reserved=8323072KB, committed=192512KB)

- Class (reserved=1050202KB, committed=10522KB)

(classes #15409)

( instance classes #14405, array classes #1004)

(malloc=1626KB #33495)

(mmap: reserved=1048576KB, committed=8896KB)

( Metadata: )

( reserved=57344KB, committed=57216KB)

( used=56968KB)

( waste=248KB =0.43%)

( Class space:)

( reserved=1048576KB, committed=8896KB)

( used=8651KB)

( waste=245KB =2.75%)

- Thread (reserved=669351KB, committed=41775KB)

(thread #653)

(stack: reserved=667648KB, committed=40072KB)

(malloc=939KB #3932)

(arena=764KB #1304)

- Code (reserved=50742KB, committed=17786KB)

(malloc=1206KB #9495)

(mmap: reserved=49536KB, committed=16580KB)

- GC (reserved=370980KB, committed=69260KB)

(malloc=28516KB #8340)

(mmap: reserved=342464KB, committed=40744KB)

- Compiler (reserved=159KB, committed=159KB)

(malloc=29KB #813)

(arena=131KB #3)

- Internal (reserved=1373KB, committed=1373KB)

(malloc=1309KB #6135)

(mmap: reserved=64KB, committed=64KB)

- Other (reserved=12348KB, committed=12348KB)

(malloc=12348KB #14)

- Symbol (reserved=18629KB, committed=18629KB)

(malloc=16479KB #445877)

(arena=2150KB #1)

- Native Memory Tracking (reserved=8426KB, committed=8426KB)

(malloc=325KB #4777)

(tracking overhead=8102KB)

- Shared class space (reserved=12032KB, committed=12032KB)

(mmap: reserved=12032KB, committed=12032KB)

- Arena Chunk (reserved=187KB, committed=187KB)

(malloc=187KB)

- Tracing (reserved=32KB, committed=32KB)

(arena=32KB #1)

- Logging (reserved=5KB, committed=5KB)

(malloc=5KB #216)

- Arguments (reserved=31KB, committed=31KB)

(malloc=31KB #90)

- Module (reserved=403KB, committed=403KB)

(malloc=403KB #2919)

- Safepoint (reserved=8KB, committed=8KB)

(mmap: reserved=8KB, committed=8KB)

- Synchronization (reserved=56KB, committed=56KB)

(malloc=56KB #789)

- Serviceability (reserved=1KB, committed=1KB)

(malloc=1KB #18)

- Metaspace (reserved=57606KB, committed=57478KB)

(malloc=262KB #180)

(mmap: reserved=57344KB, committed=57216KB)

- String Deduplication (reserved=1KB, committed=1KB)

(malloc=1KB #8)

Let’s analyze the meaning of the above information by different subsystems:

1. Java Heap Memory - the source of memory for all Java object allocations, managed and collected by JVM GC. This will be our focus in Chapter 3:

// Heap memory usage: reserved 8323072KB, currently committed 192512KB for actual use

Java Heap (reserved=8323072KB, committed=192512KB)

// All heap memory is allocated through mmap system calls

(mmap: reserved=8323072KB, committed=192512KB)

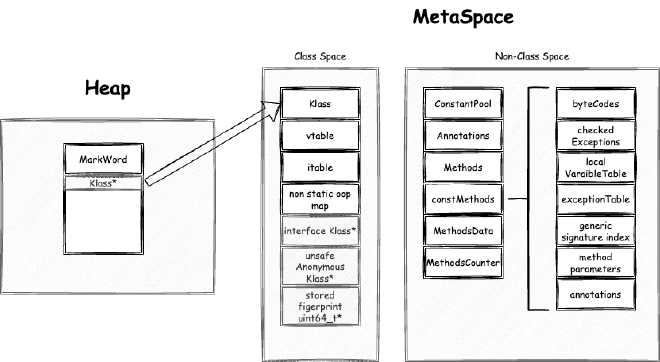

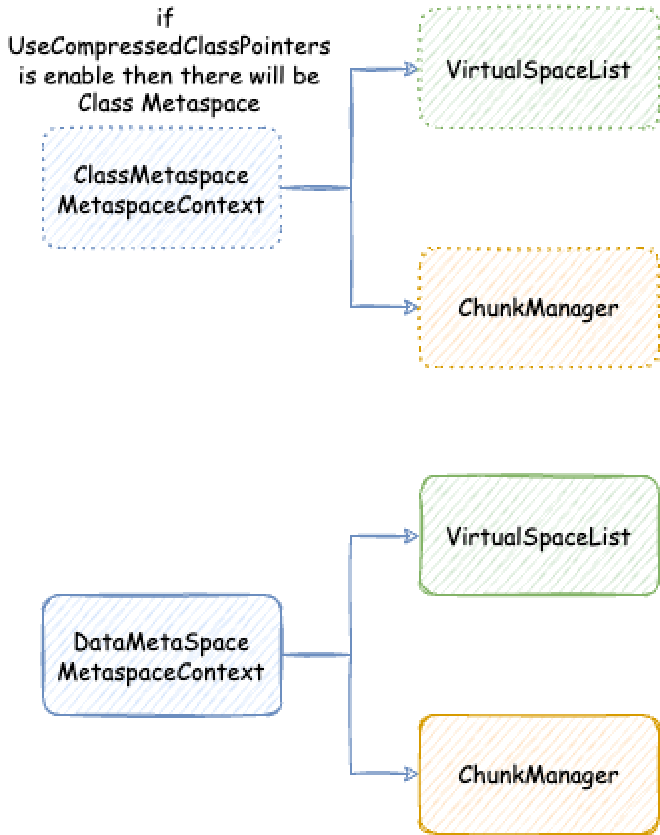

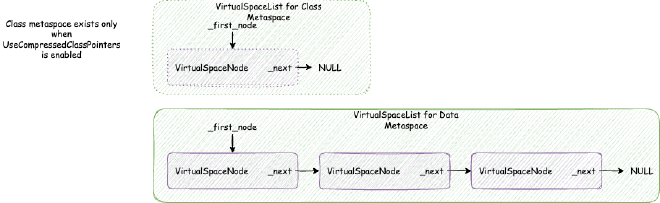

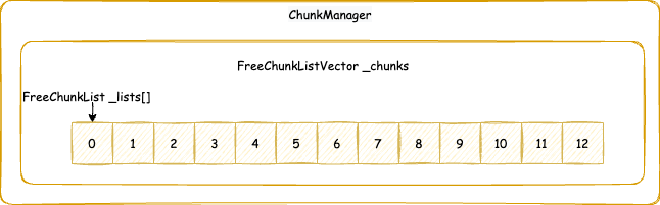

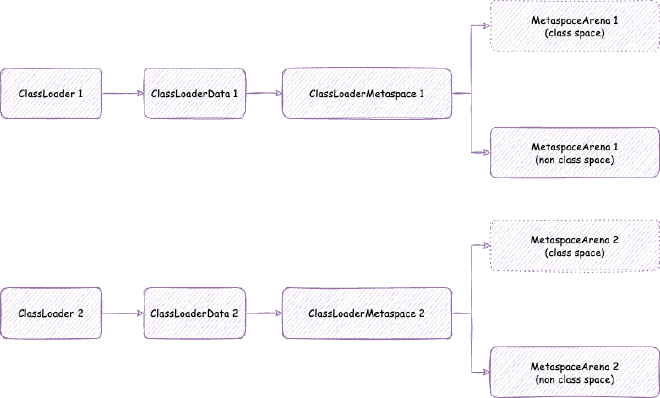

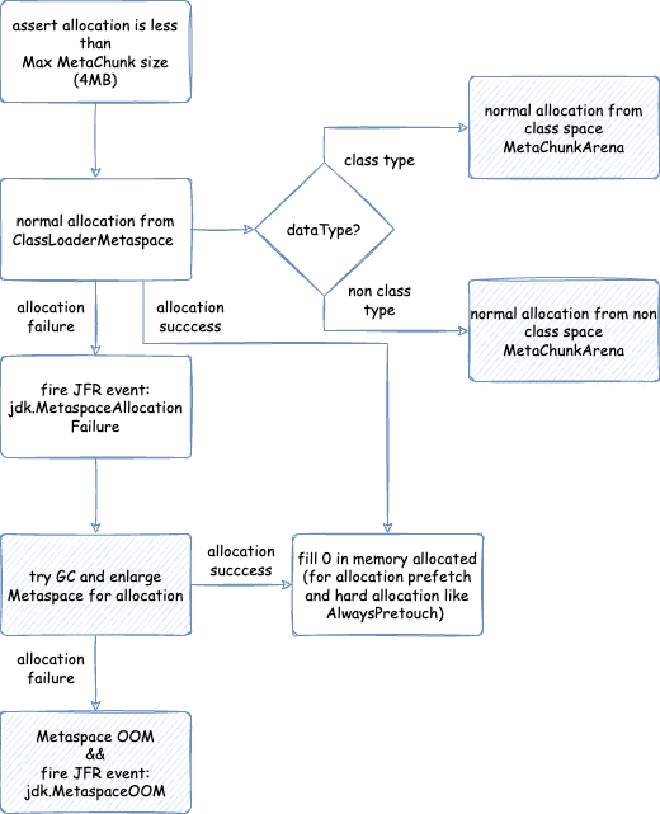

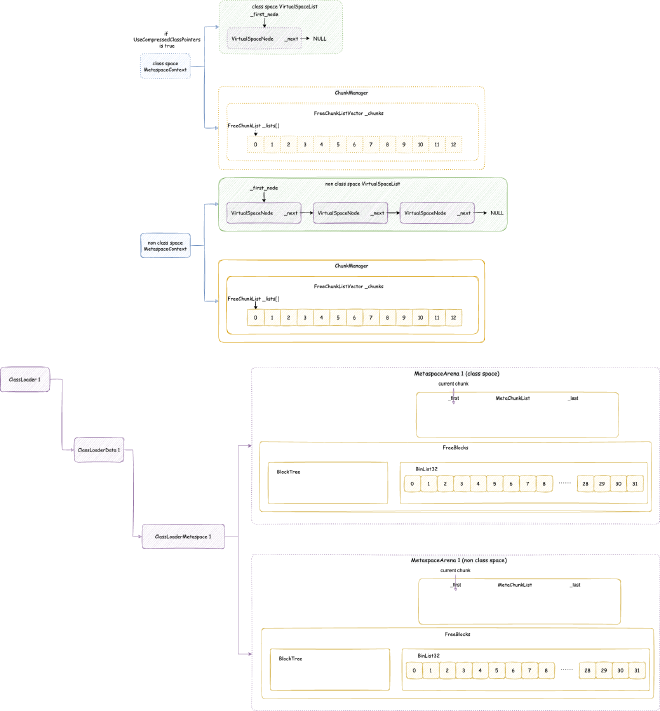

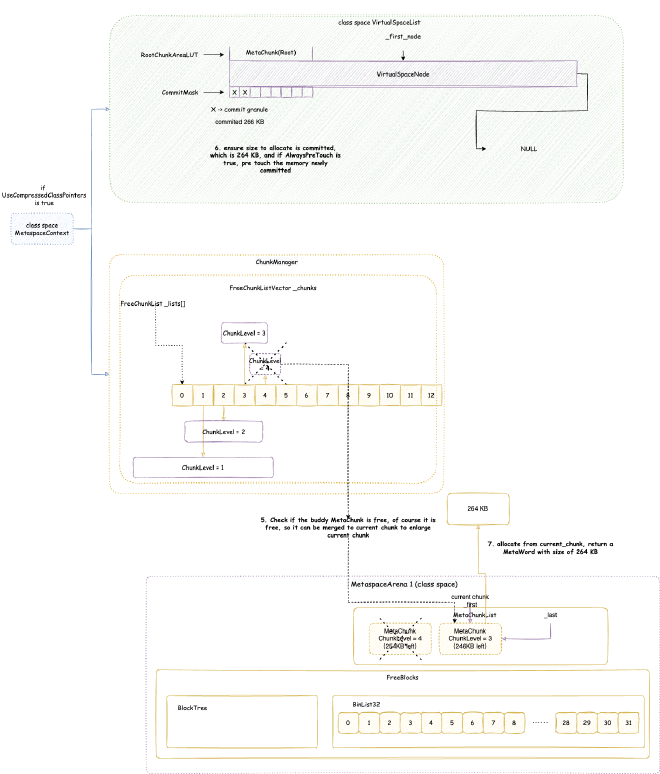

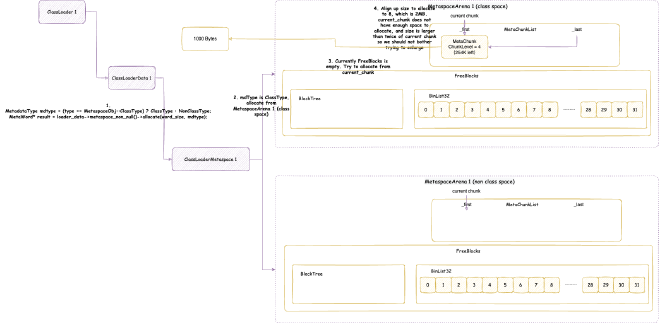

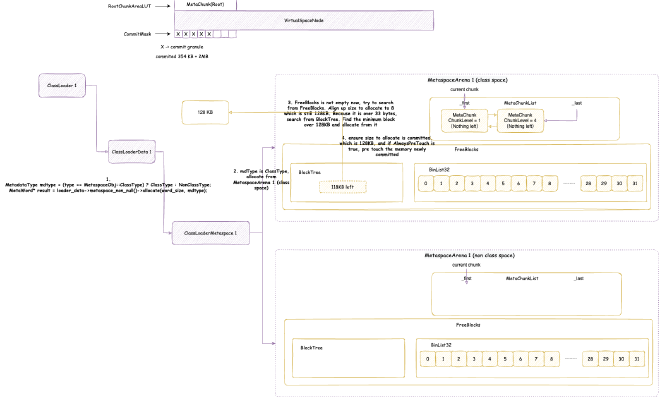

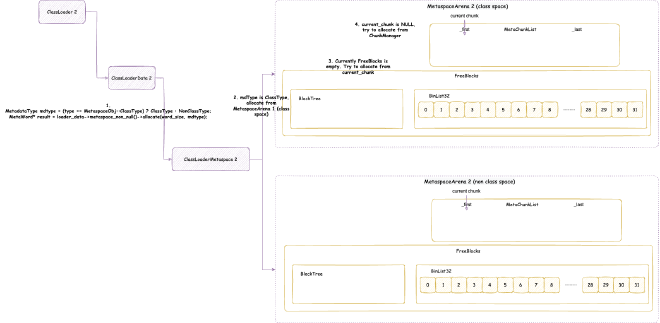

2. Metaspace - space occupied when JVM loads class files into memory for subsequent use. Note this is JVM C++ level memory usage, mainly including class files parsed by JVM into C++ Klass classes and related elements. The corresponding Java reflection Class objects are still in heap memory space:

// Class is total class metaspace usage: reserved 1050202KB, currently committed 10522KB for actual use

// Total reserved 1050202KB = mmap reserved 1048576KB + malloc 1626KB

// Total committed 10522KB = mmap committed 8896KB + malloc 1626KB

Class (reserved=1050202KB, committed=10522KB)

(classes #15409) // Total of 15409 classes loaded

( instance classes #14405, array classes #1004) // 14405 instance classes, 1004 array classes

(malloc=1626KB #33495) // Total 1626KB allocated through malloc system calls, 33495 malloc calls

(mmap: reserved=1048576KB, committed=8896KB) // Through mmap system calls: reserved 1048576KB, committed 8896KB for actual use

( Metadata: ) // Note: MetaData doesn't belong to class metaspace, belongs to data metaspace, detailed analysis in Chapter 4

( reserved=57344KB, committed=57216KB) // Data metaspace currently reserved 57344KB, committed 57216KB for actual use

( used=56968KB) // But actual usage from MetaChunk perspective is only 56968KB for actual data allocation, 248KB waste

( waste=248KB =0.43%)

( Class space:)

( reserved=1048576KB, committed=8896KB) // Class metaspace currently reserved 1048576KB, committed 8896KB for actual use

( used=8651KB) // But actual usage from MetaChunk perspective is only 8651KB for actual data allocation, 245KB waste

( waste=245KB =2.75%)

Shared class space (reserved=12032KB, committed=12032KB) // Shared class space: currently reserved 12032KB, committed 12032KB for actual use, this is actually part of Class above

(mmap: reserved=12032KB, committed=12032KB)

Module (reserved=403KB, committed=403KB) // Space for loading and recording modules: currently reserved 403KB, committed 403KB for actual use

(malloc=403KB #2919)

Metaspace (reserved=57606KB, committed=57478KB) // Equivalent to MetaChunk in Class above (excluding malloc parts): currently reserved 57606KB, committed 57478KB for actual use

(malloc=262KB #180)

(mmap: reserved=57344KB, committed=57216KB)

3. C++ String/Symbol Space - when loading classes, there are many string information (note: not Java strings, but JVM level C++ strings). String information from different classes may be duplicated. So they’re unified in the symbol table for reuse. Metaspace stores references to symbols in the symbol table. This isn’t the focus of this article, so we won’t analyze it in detail.

Symbol (reserved=18629KB, committed=18629KB)

(malloc=16479KB #445877) // Total 16479KB allocated through malloc system calls, 445877 malloc calls

(arena=2150KB #1) // Total 2150KB allocated through arena system calls, 1 arena call

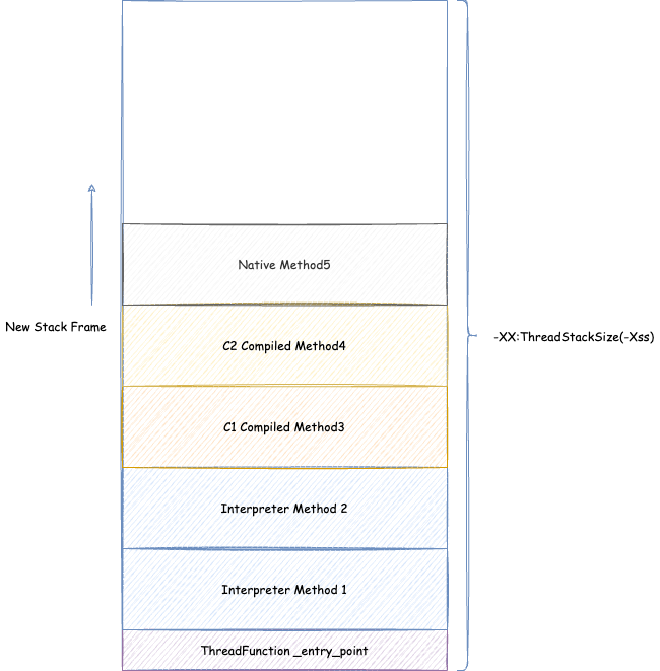

4. Thread Memory - mainly each thread’s stack. We’ll mainly analyze thread stack space (in Chapter 5). Other thread management space is very small and can be ignored.

// Total reserved 669351KB, committed 41775KB

Thread (reserved=669351KB, committed=41775KB)

(thread #653) // Current thread count is 653

(stack: reserved=667648KB, committed=40072KB) // Thread stack space: we didn't specify Xss, default is 1MB, so reserved is 653 * 1024 = 667648KB, currently committed 40072KB for actual use

(malloc=939KB #3932) // Total 939KB allocated through malloc system calls, 3932 malloc calls

(arena=764KB #1304) // Memory allocated through JVM internal Arena, total 764KB allocated, 1304 Arena allocation calls

5. JIT Compiler Space and Compiled Code Space - space occupied by the JIT compiler itself and space occupied by JIT-compiled code. This isn’t the focus of this article, so we won’t analyze it in detail.

Code (reserved=50742KB, committed=17786KB)

(malloc=1206KB #9495)

(mmap: reserved=49536KB, committed=16580KB)

Compiler (reserved=159KB, committed=159KB)

(malloc=29KB #813)

(arena=131KB #3)

6. Arena Data Structure Space - we see many arena-allocated memory in Native Memory Tracking. This is the space occupied by managing Arena data structures. This isn’t the focus of this article, so we won’t analyze it in detail.

Arena Chunk (reserved=187KB, committed=187KB)

(malloc=187KB)

7. JVM Tracing Memory - includes space occupied by JVM perf and JFR. JFR space usage might be quite large. This isn’t the focus of this article, so we won’t analyze it in detail.

Tracing (reserved=32KB, committed=32KB)

(arena=32KB #1)

8. JVM Logging Memory (logs specified by -Xlog parameter, and Java 17+ introduced asynchronous JVM logging -Xlog:async, buffers needed for asynchronous logging are also here). This isn’t the focus of this article, so we won’t analyze it in detail.

Logging (reserved=5KB, committed=5KB)

(malloc=5KB #216)

9. JVM Arguments Memory - we need to save and process current JVM parameters and various parameters passed when users start the JVM (sometimes called flags). This isn’t the focus of this article, so we won’t analyze it in detail.

Arguments (reserved=31KB, committed=31KB)

(malloc=31KB #90)

10. JVM Safepoint Memory - fixed two pages of memory (here one page is 4KB, we’ll analyze this page size related to the operating system in Chapter 2), used for JVM safepoint implementation, doesn’t change with JVM runtime memory usage. This isn’t the focus of this article, so we won’t analyze it in detail.

Safepoint (reserved=8KB, committed=8KB)

(mmap: reserved=8KB, committed=8KB)

11. Java Synchronization Mechanism Memory (e.g., synchronized, and AQS-based LockSupport) - memory occupied by underlying C++ data structures, internal system mutexes, etc. This isn’t the focus of this article, so we won’t analyze it in detail.

Synchronization (reserved=56KB, committed=56KB)

(malloc=56KB #789)

12. JVMTI Related Memory - JVMTI is the abbreviation for Java Virtual Machine Tool Interface. It’s part of the Java Virtual Machine (JVM), providing a set of APIs that allow developers to develop their own Java tools and agent programs to monitor, analyze, and debug Java applications. This memory is occupied by JVM for generating data after calling JVMTI APIs. This isn’t the focus of this article, so we won’t analyze it in detail.

Serviceability (reserved=1KB, committed=1KB)

(malloc=1KB #18)

13. Java String Deduplication Memory - Java string deduplication mechanism can reduce memory usage of string objects in applications. This mechanism has always performed poorly under certain GCs, especially G1GC and ZGC, so it’s disabled by default. Can be enabled with -XX:+UseStringDeduplication. This isn’t the focus of this article, so we won’t analyze it in detail.

String Deduplication (reserved=1KB, committed=1KB)

(malloc=1KB #8)

14. JVM GC Data Structures and Information Space - memory occupied by data structures and recorded information needed by JVM GC. This memory might be quite large, especially for low-latency focused GCs like ZGC. ZGC actually uses a space-for-time approach, increasing CPU consumption and memory usage while eliminating global pauses. This isn’t the focus of this article, so we won’t analyze it in detail.

GC (reserved=370980KB, committed=69260KB)

(malloc=28516KB #8340)

(mmap: reserved=342464KB, committed=40744KB)

15. JVM Internal and Other Usage - internal usage (usage not belonging to other categories) and other usage (not JVM itself but extra space occupied by certain OS system calls), won’t be very large.

Internal (reserved=1373KB, committed=1373KB)

(malloc=1309KB #6135)

(mmap: reserved=64KB, committed=64KB)

Other (reserved=12348KB, committed=12348KB)

(malloc=12348KB #14)

16. Native Memory Tracking Overhead - memory consumed by enabling Native Memory Tracking itself.

Native Memory Tracking (reserved=8426KB, committed=8426KB)

(malloc=325KB #4777)

(tracking overhead=8102KB)

1.4. Continuous Monitoring of Native Memory Tracking Summary Information#

Now JVM processes are generally deployed in cloud container orchestration environments like Kubernetes, where each JVM process memory is limited. If the limit is exceeded, OOMKiller will kill the JVM process. We generally only consider enabling NativeMemoryTracking to see which memory areas are consuming more and how to adjust when our JVM process is killed by OOMKiller.

OOMKiller uses a scoring system - your JVM process won’t be killed immediately upon exceeding limits, but points accumulate when exceeded, and when accumulated to a certain degree, it may be killed by OOMKiller. So we can capture the point where memory limits are exceeded for analysis by periodically outputting Native Memory Tracking summary information.

However, we cannot judge JVM memory usage solely based on Native Memory Tracking data, because as we’ll see in the analysis of JVM memory allocation and usage flow later, much of the memory allocated by JVM through mmap is first reserved, then committed, and only when actual data is written does it truly allocate physical memory. Also, JVM dynamically releases some memory, which may not be immediately reclaimed by the OS. Native Memory Tracking represents memory that JVM thinks it has requested from the OS, which differs from actual OS-allocated memory. So we can’t judge solely by viewing Native Memory Tracking; we also need to check indicators that reflect actual memory usage. Here we can check the Linux process monitoring file smaps_rollup to see specific memory usage, for example (generally don’t look at Rss because it can be inaccurate when multiple virtual addresses map to the same physical address, so mainly focus on Pss, but Pss updates aren’t real-time, but close enough - this can be understood as actual physical memory occupied by the process):

> cat /proc/23/smaps_rollup

689000000-fffff53a9000 ---p 00000000 00:00 0 [rollup]

Rss: 5870852 kB

Pss: 5849120 kB

Pss_Anon: 5842756 kB

Pss_File: 6364 kB

Pss_Shmem: 0 kB

Shared_Clean: 27556 kB

Shared_Dirty: 0 kB

Private_Clean: 524 kB

Private_Dirty: 5842772 kB

Referenced: 5870148 kB

Anonymous: 5842756 kB

LazyFree: 0 kB

AnonHugePages: 0 kB

ShmemPmdMapped: 0 kB

FilePmdMapped: 0 kB

Shared_Hugetlb: 0 kB

Private_Hugetlb: 0 kB

Swap: 0 kB

SwapPss: 0 kB

Locked: 0 kB

The author implements timed process memory monitoring by adding the following code to each Spring Cloud microservice process, mainly using smaps_rollup to view actual physical memory usage to find memory limit exceeded time points, and Native Memory Tracking to view JVM memory area usage for parameter optimization guidance.

import lombok.extern.log4j.Log4j2;

import org.apache.commons.io.FileUtils;

import org.springframework.boot.context.event.ApplicationReadyEvent;

import org.springframework.context.ApplicationListener;

import java.io.BufferedReader;

import java.io.File;

import java.io.IOException;

import java.io.InputStreamReader;

import java.util.List;

import java.util.concurrent.ScheduledThreadPoolExecutor;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.atomic.AtomicBoolean;

import java.util.stream.Collectors;

import static org.springframework.cloud.bootstrap.BootstrapApplicationListener.BOOTSTRAP_PROPERTY_SOURCE_NAME;

@Log4j2

public class MonitorMemoryRSS implements ApplicationListener<ApplicationReadyEvent> {

private static final AtomicBoolean INITIALIZED = new AtomicBoolean(false);

private static final ScheduledThreadPoolExecutor sc = new ScheduledThreadPoolExecutor(1);

@Override

public void onApplicationEvent(ApplicationReadyEvent event) {

if (isBootstrapContext(event)) {

return;

}

synchronized (INITIALIZED) {

if (INITIALIZED.get()) {

return;

}

sc.scheduleAtFixedRate(() -> {

long pid = ProcessHandle.current().pid();

try {

// Read smaps_rollup

List<String> strings = FileUtils.readLines(new File("/proc/" + pid + "/smaps_rollup"));

log.info("MonitorMemoryRSS, smaps_rollup: {}", strings.stream().collect(Collectors.joining("\n")));

// Read Native Memory Tracking information

Process process = Runtime.getRuntime().exec(new String[]{"jcmd", pid + "", "VM.native_memory"});

try (BufferedReader reader = new BufferedReader(new InputStreamReader(process.getInputStream()))) {

log.info("MonitorMemoryRSS, native_memory: {}", reader.lines().collect(Collectors.joining("\n")));

}

} catch (IOException e) {

}

}, 0, 30, TimeUnit.SECONDS);

INITIALIZED.set(true);

}

}

static boolean isBootstrapContext(ApplicationReadyEvent applicationEvent) {

return applicationEvent.getApplicationContext().getEnvironment().getPropertySources().contains(BOOTSTRAP_PROPERTY_SOURCE_NAME);

}

}

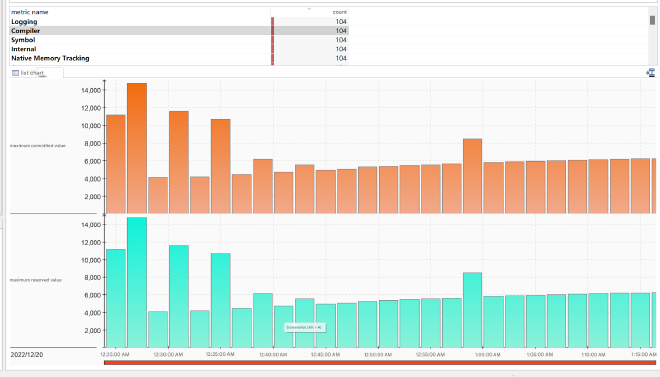

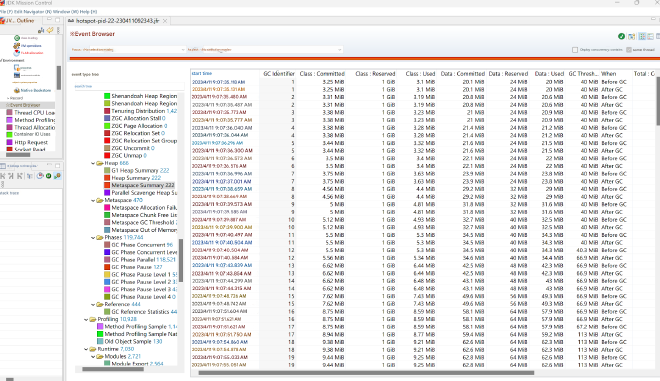

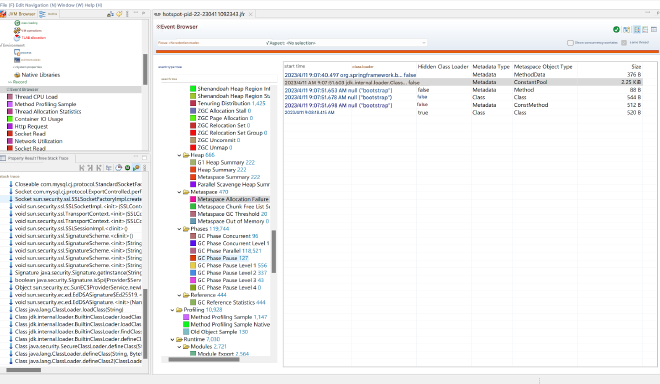

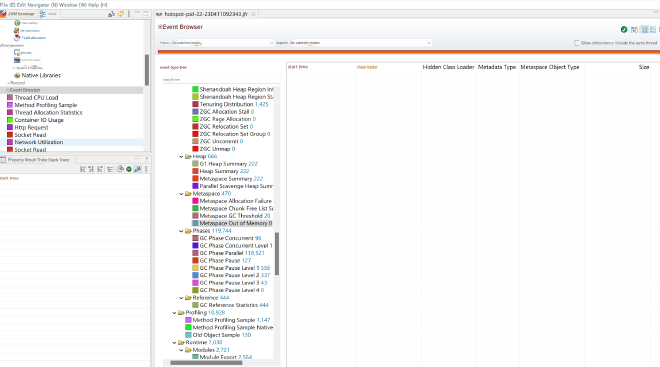

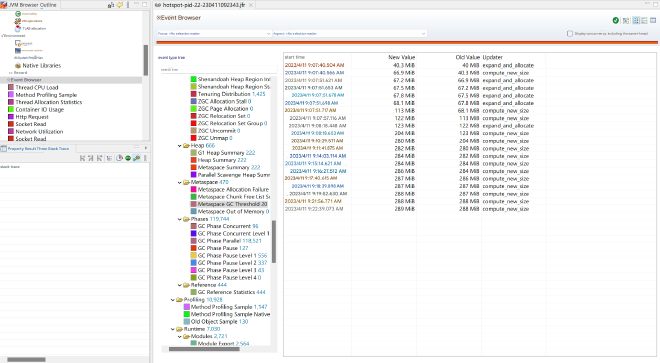

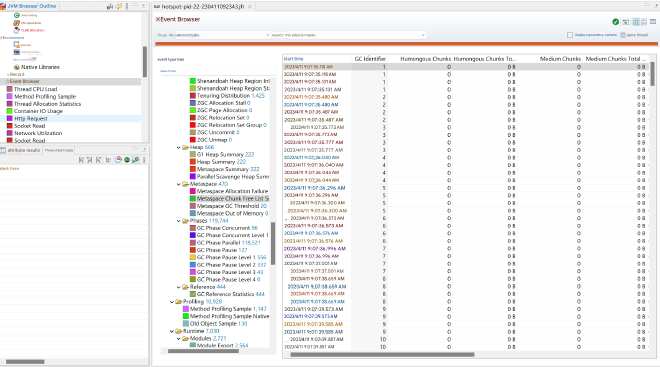

Additionally, the author abstracts these outputs as JFR events, with the effect shown in the image.

1.5. Why Memory Allocated in Native Memory Tracking is Divided into Reserved and Committed#

This will be analyzed in detail in Chapter 2.

2. JVM Memory Allocation and Usage Flow#

2.1. Brief Description of Linux Memory Management Model#

Linux memory management model isn’t the main focus of our series discussion. We’ll only briefly mention what we need to understand for this series.

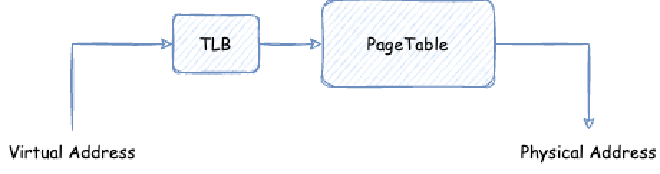

CPUs access memory through addressing. Most current CPUs are 64-bit, meaning the addressing range is: 0x0000 0000 0000 0000 ~ 0xFFFF FFFF FFFF FFFF, capable of managing 16EB of memory. However, programs don’t directly access actual physical memory through CPU addressing, but through introducing MMU (Memory Management Unit) with an abstraction layer of virtual memory between CPU and actual physical addresses. This way, programs apply for and access virtual memory addresses, and MMU maps these virtual memory addresses to actual physical memory addresses. Also, to reduce memory fragmentation and increase memory allocation efficiency, Linux abstracts the concept of memory paging based on MMU, dividing virtual addresses into fixed-size pages (default 4K, if the platform supports more and larger page sizes, JVM can also utilize them, as we’ll see when analyzing related JVM parameters later), and when pages are actually used for writing data, mapping same-sized actual physical memory (page frames), or transferring some less frequently used pages to other storage devices like disks when physical memory is insufficient.

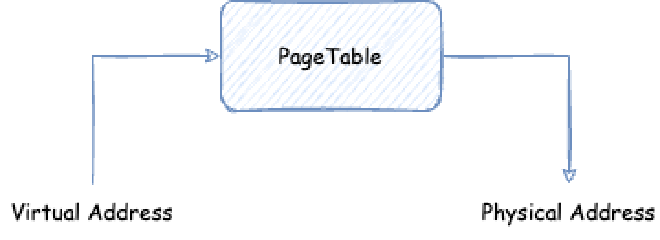

Generally, there are multiple processes using memory in a system, each process has its own independent virtual memory space. Assuming we have three processes here, process A’s accessed virtual address can be the same as process B and process C’s virtual addresses, so how does the OS distinguish them? How does the OS convert these virtual addresses to physical memory? This requires page tables. Page tables are also independent for each process. The OS will save physical memory in the process’s page table when mapping physical memory for processes to save user data. Then, when processes access virtual memory space, they find physical memory through page tables:

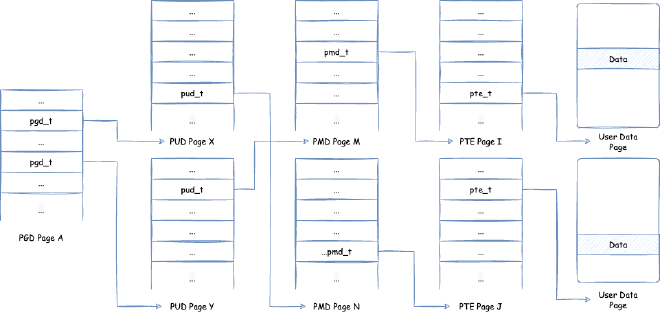

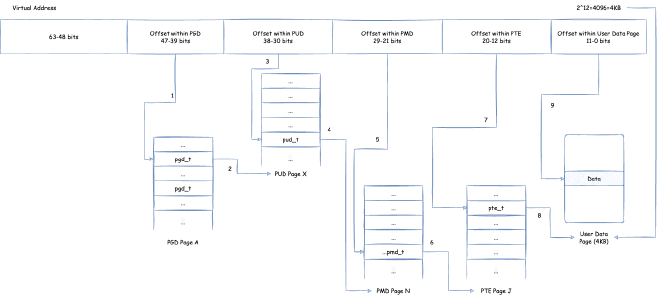

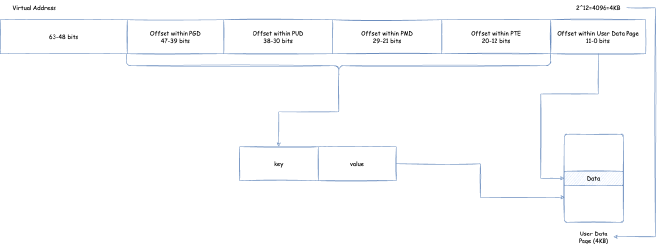

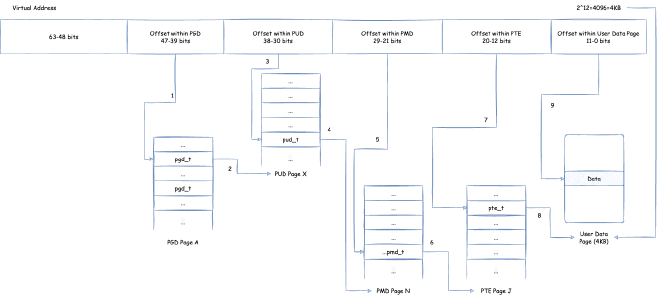

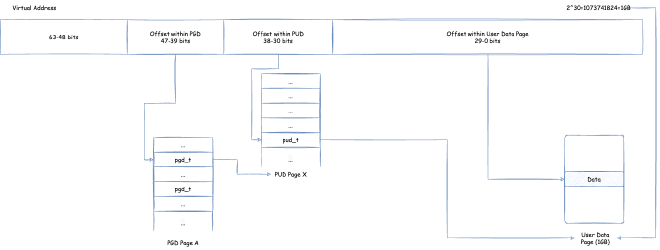

How do page tables convert a virtual memory address (we need to note that currently virtual memory addresses, user space and kernel space can use addresses from 0x0000 0000 0000 0000 ~ 0x0000 FFFF FFFF FFFF, i.e., 256TB) to physical memory? Below we show a four-level page table structure view in x86, 64-bit environment:

Here, page tables are divided into four levels: PGD (Page Global Directory), PUD (Page Upper Directory), PMD (Page Middle Directory), PTE (Page Table Entry). Each page table contains page table entries that save references to the next level page table, except the last level PTE contains page table entries that save pointers to user data memory. The process of finding corresponding user data memory through page tables using virtual memory addresses to read data is:

- Take bits

39 ~ 47of the virtual address (because user space and kernel space can use addresses from0x0000 0000 0000 0000 ~ 0x0000 FFFF FFFF FFFF, i.e., addresses below 47 bits) as offset, locate PGD page table entrypgd_tin the unique PGD page based on offset - Use

pgd_tto locate the specific PUD page - Take bits

30 ~ 38of the virtual address as offset, locate PUD page table entrypud_tin the corresponding PUD page based on offset - Use

pud_tto locate the specific PMD page - Take bits

21 ~ 29of the virtual address as offset, locate PMD page table entrypmd_tin the corresponding PMD page based on offset - Use

pmd_tto locate the specific PTE page - Take bits

12 ~ 20of the virtual address as offset, locate PTE page table entrypte_tin the corresponding PTE page based on offset - Use

pte_tto locate the specific user data physical memory page - Use the final bits

0 ~ 11as offset, corresponding to the corresponding offset in the user data physical memory page

If every virtual memory access requires accessing this page table to translate to actual physical memory, performance would be too poor. So generally CPUs have a TLB (Translation Lookaside Buffer) inside, usually part of the CPU’s MMU. TLB is responsible for caching the mapping relationship between virtual memory and actual physical memory, and TLB capacity is generally very small. Every virtual memory access first checks if there’s a cache in TLB, and only queries the page table if there isn’t.

By default, TLB caches with key as bits 12 ~ 47 of the address, value is the actual physical memory page. This way steps 1 to 7 above can be replaced with accessing TLB:

- Take bits

12 ~ 47of the virtual address as key, access TLB, locate the specific user data physical memory page. - Use the final bits

0 ~ 11as offset, corresponding to the corresponding offset in the user data physical memory page.

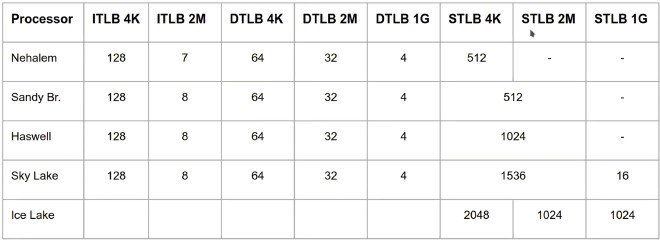

TLB is generally very small. Let’s look at TLB sizes in several CPUs.

We don’t need to care about what iTLB, dTLB, sTLB mean specifically, just see two points: 1. TLB can overall accommodate a small number; 2. The larger the page size, the fewer TLB can accommodate. But overall, the page size TLB can accommodate still increases (for example, Nehalem’s iTLB, when page size is 4K, can accommodate a total of 128 * 4 = 512K memory, when page size is 2M, can accommodate a total of 2 * 7 = 14M memory).

JVM needs to know page size in many places. During JVM initialization, it reads the page size through system call sysconf(_SC_PAGESIZE) and saves it for subsequent use. Reference source code: https://github.com/openjdk/jdk/blob/jdk-21%2B3/src/hotspot/os/linux/os_linux.cpp:

// Set global default page size, can get global default page size through Linux::page_size()

Linux::set_page_size(sysconf(_SC_PAGESIZE));

if (Linux::page_size() == -1) {

fatal("os_linux.cpp: os::init: sysconf failed (%s)",

os::strerror(errno));

}

// Add default page size to optional page size list, useful when involving large page allocation

_page_sizes.add(Linux::page_size());

2.2. JVM Main Memory Allocation Process#

Step 1: Each JVM subsystem (such as Java heap, metaspace, JIT code cache, GC, etc.), if needed, first reserves the maximum limit size of the memory area to be allocated during initialization (this maximum size needs to be aligned to page size (i.e., an integer multiple of page size), default page size is the aforementioned Linux::page_size()). For example, for Java heap, it’s the maximum heap size (limited by -Xmx or -XX:MaxHeapSize), and for code cache, it’s also the maximum code cache size (limited by -XX:ReservedCodeCacheSize). The purpose of Reserve is to allocate a block of memory in virtual memory space specifically for a certain area. The benefits of doing this are:

- Isolate the virtual space of memory used by each JVM subsystem, so when there are bugs in JVM code (such as Segment Fault exceptions), the problematic subsystem can be quickly located through the virtual memory address in the error report.

- Can conveniently limit the maximum memory size used by this area.

- Easy to manage. Reserve doesn’t trigger the OS to allocate mapped actual physical memory, this area can expand and contract as needed within the Reserved area.

- Convenient for some JIT optimizations, for example, we deliberately reserve this area but deliberately don’t map the virtual memory of this area to physical memory, accessing this memory will cause Segment Fault exceptions. JVM will preset Segment Fault exception handlers, in the handler check which subsystem’s Reserved area the memory address that caused the Segment Fault exception belongs to, and determine what operation to do. Later we’ll see that null check optimization throwing

NullPointerExceptionexceptions, global safepoints, and throwingStackOverflowErrorimplementations are all related to this mechanism.

In Linux environment, Reserve is implemented through mmap(2) system call, passing parameter prot = PROT_NONE. PROT_NONE means it won’t be used, i.e., no operations including read and write are allowed. If JVM uses this memory, a Segment Fault exception will occur. The source code for Reserve corresponds to:

Entry point: https://github.com/openjdk/jdk/blob/jdk-21+9/src/hotspot/share/runtime/os.cpp

char* os::reserve_memory(size_t bytes, bool executable, MEMFLAGS flags) {

// Call different pd_reserve_memory functions for each operating system to perform reserve

char* result = pd_reserve_memory(bytes, executable);

if (result != NULL) {

MemTracker::record_virtual_memory_reserve(result, bytes, CALLER_PC, flags);

}

return result;

}

The Linux implementation corresponds to: https://github.com/openjdk/jdk/blob/jdk-21+9/src/hotspot/os/linux/os_linux.cpp

char* os::pd_reserve_memory(size_t bytes, bool exec) {

return anon_mmap(nullptr, bytes);

}

static char* anon_mmap(char* requested_addr, size_t bytes) {

const int flags = MAP_PRIVATE | MAP_NORESERVE | MAP_ANONYMOUS;

// The key here is PROT_NONE, representing only reservation in virtual space, not actually mapping physical memory

// fd passed is -1, because there's no actual file mapping, our purpose here is to allocate memory, not map a file to memory

char* addr = (char*)::mmap(requested_addr, bytes, PROT_NONE, flags, -1, 0);

return addr == MAP_FAILED ? NULL : addr;

}

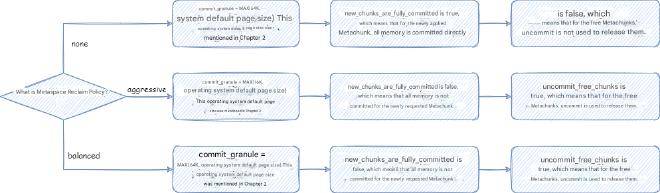

Step 2: Each JVM subsystem, according to their respective strategies, extends memory by Committing part of the Reserved area from Step 1 (size also generally page-size aligned) to request physical memory mapping from the OS, and releases physical memory to the OS by Uncommitting already Committed memory.

The source code entry for Commit: https://github.com/openjdk/jdk/blob/jdk-21+9/src/hotspot/share/runtime/os.cpp

bool os::commit_memory(char* addr, size_t bytes, bool executable) {

assert_nonempty_range(addr, bytes);

// Call different pd_commit_memory functions for each operating system to perform commit

bool res = pd_commit_memory(addr, bytes, executable);

if (res) {

MemTracker::record_virtual_memory_commit((address)addr, bytes, CALLER_PC);

}

return res;

}

The Linux implementation corresponds to: https://github.com/openjdk/jdk/blob/jdk-21+9/src/hotspot/os/linux/os_linux.cpp

bool os::pd_commit_memory(char* addr, size_t size, bool exec) {

return os::Linux::commit_memory_impl(addr, size, exec) == 0;

}

int os::Linux::commit_memory_impl(char* addr, size_t size, bool exec) {

// The key here is PROT_READ|PROT_WRITE, i.e., requesting to read and write this memory

int prot = exec ? PROT_READ|PROT_WRITE|PROT_EXEC : PROT_READ|PROT_WRITE;

uintptr_t res = (uintptr_t) ::mmap(addr, size, prot,

MAP_PRIVATE|MAP_FIXED|MAP_ANONYMOUS, -1, 0);

if (res != (uintptr_t) MAP_FAILED) {

if (UseNUMAInterleaving) {

numa_make_global(addr, size);

}

return 0;

}

int err = errno; // save errno from mmap() call above

if (!recoverable_mmap_error(err)) {

warn_fail_commit_memory(addr, size, exec, err);

vm_exit_out_of_memory(size, OOM_MMAP_ERROR, "committing reserved memory.");

}

return err;

}

After Committing memory, the OS doesn’t immediately allocate physical memory, but only allocates memory when writing data to the Committed memory. JVM has a corresponding parameter that can immediately write 0 after Committing memory to force the OS to allocate memory, which is the AlwaysPreTouch parameter. We’ll analyze this parameter in detail later, including defects in historical versions.

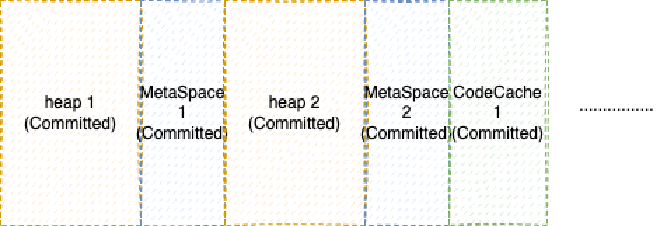

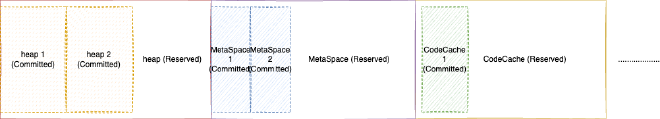

Let’s see why Reserve first then Commit is good for debugging. Look at this example: if we don’t have Step 1 Reserve and directly do Step 2 Commit, then we might allocate memory like this:

Suppose at this time, we accidentally wrote a bug in JVM that caused MetaSpace 2 memory to be reclaimed, then pointers pointing to MetaSpace 2 memory will report Segment Fault. But through the address in Segment Fault, we don’t know which area this address belongs to, unless we have another memory structure saving a list of memory Committed by each subsystem, but this is too inefficient. If we Reserve large blocks first then Commit inside them, the situation is different:

This way, just by judging the range where the address in Segment Fault is located, we can know which subsystem it is.

2.2.1. Difference Between JVM Committed Memory and Actual Occupied Memory#

In the previous section, we learned that large blocks of memory in JVM are basically first reserved in large chunks, then commit the needed small chunks, then start reading and writing to process memory. In Linux environment, this is implemented based on mmap(2). But note that after committing, memory isn’t immediately allocated physical memory, but only when actually storing things in memory does it truly map physical memory. Loading reads might also not map physical memory.

This is actually a phenomenon you might see but ignore in daily life. If you’re using SerialGC, ParallelGC, or CMS GC, old generation memory might not map physical memory before objects are promoted to old generation, although this memory has been committed. And young generation might also map physical memory as it’s used. If you’re using ZGC, G1GC, or ShenandoahGC, memory usage will be more aggressive (mainly due to memory being written due to partitioning algorithm division), this is one of the reasons you see rapid physical memory growth after switching GCs. JVM has a corresponding parameter that can immediately write 0 after Committing memory to force the OS to allocate memory, which is the AlwaysPreTouch parameter. We’ll analyze this parameter in detail later, including defects in historical versions. Other differences mainly come from the system possibly not having time to truly reclaim this physical memory after uncommitting.

So, memory that JVM thinks it has committed and actual physical memory allocated by the system might differ - JVM might think it has committed more memory than the system has allocated physical memory, or it might be less. This is why Native Memory Tracking (memory JVM thinks it has committed) doesn’t match actual physical memory usage indicators in other system monitoring.

2.3. Large Page Allocation UseLargePages#

We mentioned earlier that virtual memory needs to map physical memory to be usable, and this mapping relationship is saved in page tables in memory. Modern CPU architectures generally have TLB (Translation Lookaside Buffer, also called page table register buffer) that saves frequently used page table mapping entries. TLB size is limited, generally TLB can only accommodate less than 100 page table mapping entries. If we can keep all page table mapping entries corresponding to program virtual memory in TLB, it can greatly improve program performance. This requires minimizing the number of page table mapping entries: Number of page table entries = Program required memory size / Page size. We either reduce program required memory or increase page size. We generally consider increasing page size, which is the origin of large page allocation. JVM also supports large page allocation for heap memory allocation to optimize large heap memory allocation. So what large page allocation methods are available in Linux environment?

2.3.1. Linux Large Page Allocation Method - Huge Translation Lookaside Buffer Page (hugetlbfs)#

Related Linux kernel documentation: https://www.kernel.org/doc/Documentation/vm/hugetlbpage.txt

This is an earlier large page allocation method, essentially working on the page table mapping mentioned earlier:

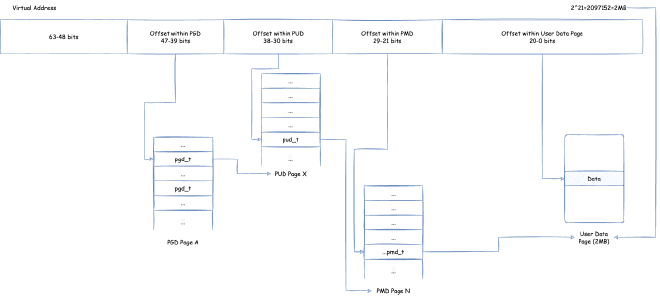

Default 4K page size:

PMD directly maps actual physical pages, page size is 4K * 2^9 = 2M:

PUD directly maps actual physical pages, page size is 2M * 2^9 = 1G:

However, to use this feature, the OS needs to enable CONFIG_HUGETLBFS and CONFIG_HUGETLB_PAGE when building. After that, large pages are usually pre-allocated by system management control and put into a pool. Then, large page allocation can be used to request memory from the pool through mmap system calls or shmget,shmat SysV shared memory system calls.

This large page allocation method requires the system to preset enabling large pages and pre-allocate large pages. It also has some invasiveness to code, lacking flexibility. But the benefit is more controllable performance. Another very flexible Transparent Huge Pages (THP) method can always have some unexpected performance situations.

2.3.2. Linux Large Page Allocation Method - Transparent Huge Pages (THP)#

Related Linux kernel documentation: https://www.kernel.org/doc/Documentation/vm/transhuge.txt

THP is a second method of using large pages that supports automatic page size upgrade and downgrade, making it very flexible with basically no invasiveness to user code. But as mentioned earlier, this system’s automatic page size upgrade and downgrade, and the system’s general consideration of versatility, can lead to unexpected performance bottlenecks in certain situations.

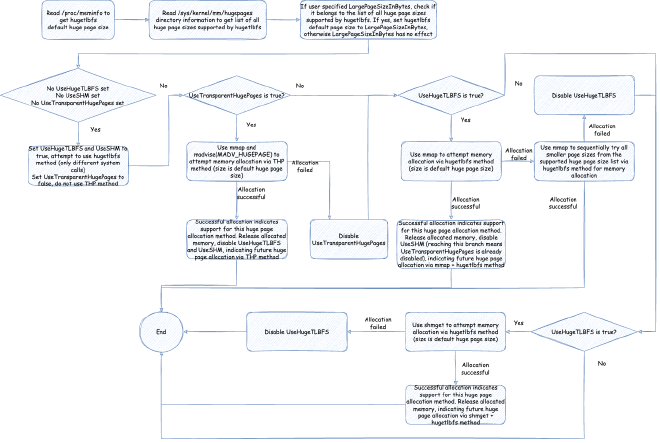

2.3.3. JVM Large Page Allocation Related Parameters and Mechanisms#

Related parameters are as follows:

UseLargePages: Explicitly specifies whether to enable large page allocation. If disabled, the parameters below won’t take effect. Default is false on Linux.UseHugeTLBFS: Explicitly specifies whether to use the first large page allocation method hugetlbfs and allocate memory throughmmapsystem calls. Default is false on Linux.UseSHM: Explicitly specifies whether to use the first large page allocation method hugetlbfs and allocate memory throughshmget,shmatsystem calls. Default is false on Linux.UseTransparentHugePages: Explicitly specifies whether to use the second large page allocation method THP. Default is false on Linux.LargePageSizeInBytes: Specifies explicit large page size, only applicable to the first large page allocation method hugetlbfs, and must belong to OS-supported page sizes or won’t take effect. Default is 0, meaning not specified.

First, we need to make a simple judgment on the above parameters: if UseLargePages isn’t specified, then use the corresponding system’s default UseLargePages value. On Linux it’s false, so large page allocation won’t be enabled. If startup parameters explicitly specify UseLargePages not to enable, then large page allocation also won’t be enabled. If reading /proc/meminfo to get default large page size can’t be read or is 0, it means the system doesn’t support large page allocation, and large page allocation also won’t be enabled.

So if large page allocation is enabled, we need to initialize and verify the feasibility of large page allocation parameters. The process is:

First, JVM will read supported page sizes according to the current platform and system environment. Of course, this is for the first large page allocation method hugetlbfs. In Linux environment, JVM will read the default Hugepagesize from /proc/meminfo, and retrieve all supported large page sizes from the /sys/kernel/mm/hugepages directory. This can be referenced in source code: https://github.com/openjdk/jdk/blob/jdk-21%2B3/src/hotspot/os/linux/os_linux.cpp. For detailed information about these files or directories, please refer to the Linux kernel documentation mentioned in previous sections: https://www.kernel.org/doc/Documentation/vm/hugetlbpage.txt

If the OS has enabled hugetlbfs, the structure under /sys/kernel/mm/hugepages directory is similar to:

> tree /sys/kernel/mm/hugepages

/sys/kernel/mm/hugepages

├── hugepages-1048576kB

│ ├── free_hugepages

│ ├── nr_hugepages

│ ├── nr_hugepages_mempolicy

│ ├── nr_overcommit_hugepages

│ ├── resv_hugepages

│ └── surplus_hugepages

└── hugepages-2048kB

├── free_hugepages

├── nr_hugepages

├── nr_hugepages_mempolicy

├── nr_overcommit_hugepages

├── resv_hugepages

└── surplus_hugepages

This hugepages-1048576kB represents support for 1GB page size, hugepages-2048kB represents support for 2KB page size.

If UseHugeTLBFS, UseSHM, and UseTransparentHugePages aren’t set, it actually follows the default, which defaults to using hugetlbfs method, not THP method, because as mentioned earlier, THP can have unexpected performance bottlenecks in certain scenarios, and in large applications, stability takes priority over peak performance. After that, it defaults to trying UseHugeTLBFS first (i.e., using mmap system calls through hugetlbfs method for large page allocation), then trying UseSHM if that doesn’t work (i.e., using shmget system calls through hugetlbfs method for large page allocation). This just verifies whether these large page memory allocation methods are available; only when available will those usable large page memory allocation methods be adopted when actually allocating memory later.

3. Java Heap Memory Related Design#

3.1. General Initialization and Extension Process#

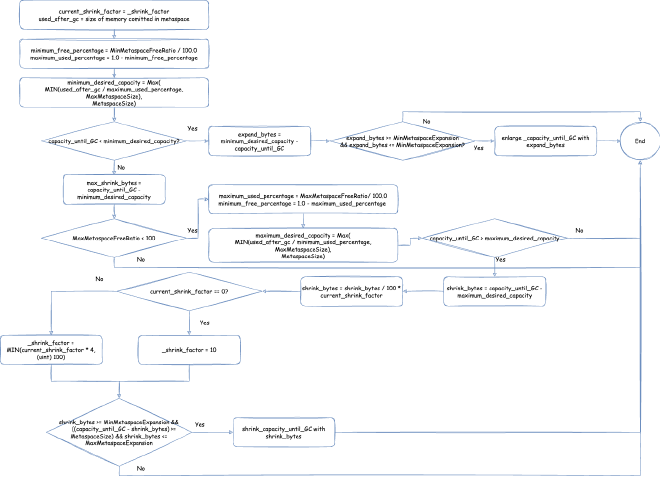

Currently, the latest JVM mainly initializes the heap and extends or shrinks the heap based on three indicators:

- Maximum heap size

- Minimum heap size

- Initial heap size

Under different GC situations, the initialization and extension processes might differ in some details, but the general approach is:

- During initialization phase, reserve maximum heap size and commit initial heap size

- During certain phases of certain GCs, dynamically extend or shrink heap size based on data from the last GC. Extension means committing more, shrinking means uncommitting part of the memory. However, heap size won’t be smaller than minimum heap size and won’t be larger than maximum heap size

3.2. Direct Specification of Three Indicators (MinHeapSize, MaxHeapSize, InitialHeapSize)#

These three indicators directly correspond to JVM parameters:

- Maximum heap size:

MaxHeapSize, if not specified there will be a default preset value to guide JVM calculation of these indicator sizes. The next section will analyze in detail, preset value is around 125MB (96M*13/10) - Minimum heap size:

MinHeapSize, default is 0, 0 means let JVM calculate itself, next section will analyze in detail - Initial heap size:

InitialHeapSize, default is 0, 0 means let JVM calculate itself, next section will analyze in detail

Corresponding source code: https://github.com/openjdk/jdk/blob/jdk-21+3/src/hotspot/share/gc/shared/gc_globals.hpp:

#define ScaleForWordSize(x) align_down((x) * 13 / 10, HeapWordSize)

product(size_t, MaxHeapSize, ScaleForWordSize(96*M), \

"Maximum heap size (in bytes)") \

constraint(MaxHeapSizeConstraintFunc,AfterErgo) \

product(size_t, MinHeapSize, 0, \

"Minimum heap size (in bytes); zero means use ergonomics") \

constraint(MinHeapSizeConstraintFunc,AfterErgo) \

product(size_t, InitialHeapSize, 0, \

"Initial heap size (in bytes); zero means use ergonomics") \

constraint(InitialHeapSizeConstraintFunc,AfterErgo) \

We can set these three indicators through startup parameters like -XX:MaxHeapSize=1G, but we often see Xmx and Xms parameters for setting these three indicators. These two parameters correspond to:

Xmx: Corresponds to maximum heap size, equivalent toMaxHeapSizeXms: Equivalent to setting both minimum heap sizeMinHeapSizeand initial heap sizeInitialHeapSize

Corresponding JVM source code: https://github.com/openjdk/jdk/blob/jdk-21+3/src/hotspot/share/runtime/arguments.cpp:

//If Xms is set

else if (match_option(option, "-Xms", &tail)) {

julong size = 0;

//Parse Xms size

ArgsRange errcode = parse_memory_size(tail, &size, 0);

if (errcode != arg_in_range) {

jio_fprintf(defaultStream::error_stream(),

"Invalid initial heap size: %s\n", option->optionString);

describe_range_error(errcode);

return JNI_EINVAL;

}

//Set parsed value to MinHeapSize

if (FLAG_SET_CMDLINE(MinHeapSize, (size_t)size) != JVMFlag::SUCCESS) {

return JNI_EINVAL;

}

//Set parsed value to InitialHeapSize

if (FLAG_SET_CMDLINE(InitialHeapSize, (size_t)size) != JVMFlag::SUCCESS) {

return JNI_EINVAL;

}

//If Xmx is set

} else if (match_option(option, "-Xmx", &tail) || match_option(option, "-XX:MaxHeapSize=", &tail)) {

julong long_max_heap_size = 0;

//Parse Xmx size

ArgsRange errcode = parse_memory_size(tail, &long_max_heap_size, 1);

if (errcode != arg_in_range) {

jio_fprintf(defaultStream::error_stream(),

"Invalid maximum heap size: %s\n", option->optionString);

describe_range_error(errcode);

return JNI_EINVAL;

}

//Set parsed value to MaxHeapSize

if (FLAG_SET_CMDLINE(MaxHeapSize, (size_t)long_max_heap_size) != JVMFlag::SUCCESS) {

return JNI_EINVAL;

}

}

Finally, JVM startup parameters can appear multiple times for the same parameter, but only the last one takes effect, for example:

java -XX:MaxHeapSize=8G -XX:MaxHeapSize=4G -XX:MaxHeapSize=8M -version

This command starts JVM with MaxHeapSize of 8MB. Since Xmx is equivalent to MaxHeapSize as mentioned earlier, this can also be written (though MaxHeapSize is still 8MB in the end):

java -Xmx=8G -XX:MaxHeapSize=4G -XX:MaxHeapSize=8M -version

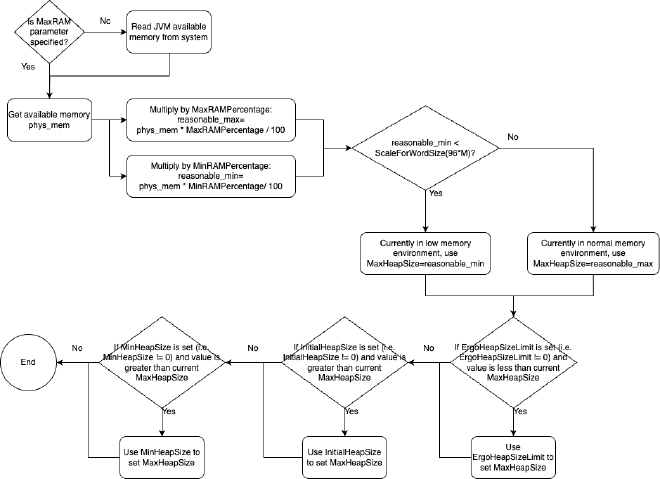

3.3. How These Three Indicators (MinHeapSize, MaxHeapSize, InitialHeapSize) are Calculated When Not Manually Specified#

In the previous section, we mentioned we can manually specify these three parameters. What if we don’t specify them? How does JVM calculate the size of these three indicators?

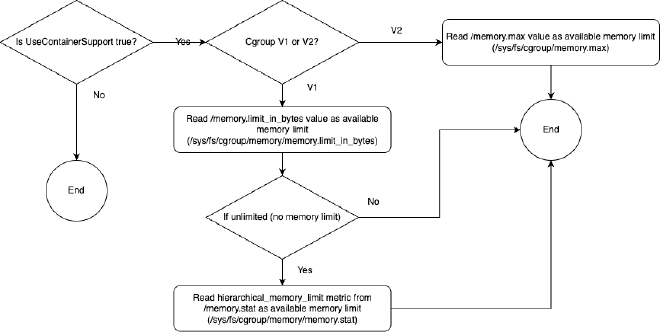

First, of course, JVM needs to read JVM available memory: First, JVM needs to know how much memory it can use, which we call available memory. This introduces the first JVM parameter, MaxRAM, which is used to explicitly specify the available memory size for the JVM process. If not specified, JVM will read the system available memory itself. This available memory is used to guide JVM in limiting maximum heap memory. Later we’ll see many JVM parameters related to this available memory.

We mentioned earlier that even if MaxHeapSize or Xmx isn’t specified, MaxHeapSize has its own preset reference value. In source code, this preset reference value is around 125MB (96M*13/10). But generally the final value won’t be based on this reference value, JVM has very complex calculations during initialization to calculate appropriate values. For example, you can execute the following command on your computer and see output similar to below:

> java -XX:+UnlockDiagnosticVMOptions -XX:+PrintFlagsFinal -version|grep MaxHeapSize

size_t MaxHeapSize = 1572864000 {product} {ergonomic}

size_t SoftMaxHeapSize = 1572864000 {manageable} {ergonomic}

openjdk version "17.0.2" 2022-01-18 LTS

OpenJDK Runtime Environment Corretto-17.0.2.8.1 (build 17.0.2+8-LTS)

OpenJDK 64-Bit Server VM Corretto-17.0.2.8.1 (build 17.0.2+8-LTS, mixed mode, sharing)

You can see the MaxHeapSize size and that its value is determined by ergonomic, meaning it’s calculated by JVM itself rather than manually specified.

The 125MB initial reference value mentioned above is generally used for JVM calculations. Let’s analyze this calculation process, starting with the MaxHeapSize calculation process:

The process involves the following parameters, and some deprecated parameters that will be converted to non-deprecated parameters:

MinRAMPercentage: Don’t be misled by the name, this parameter takes effect when available memory is relatively small, i.e., maximum heap memory usage is this parameter’s specified percentage of available memory, default is 50, i.e., 50%MaxRAMPercentage: Don’t be misled by the name, this parameter takes effect when available memory is relatively large, i.e., maximum heap memory usage is this parameter’s specified percentage of available memory, default is 25, i.e., 25%ErgoHeapSizeLimit: Through automatic calculation, the calculated maximum heap memory size doesn’t exceed this parameter’s specified size, default is 0 meaning no limitMinRAMFraction: Deprecated, if configured will be converted toMinRAMPercentagewith conversion relationship:MinRAMPercentage= 100.0 /MinRAMFraction, default is 2MaxRAMFraction: Deprecated, if configured will be converted toMaxRAMPercentagewith conversion relationship:MaxRAMPercentage= 100.0 /MaxRAMFraction, default is 4

Corresponding source code: https://github.com/openjdk/jdk/blob/jdk-21+3/src/hotspot/share/gc/shared/gc_globals.hpp:

product(double, MinRAMPercentage, 50.0, \

"Minimum percentage of real memory used for maximum heap" \

"size on systems with small physical memory size") \

range(0.0, 100.0) \

product(double, MaxRAMPercentage, 25.0, \

"Maximum percentage of real memory used for maximum heap size") \

range(0.0, 100.0) \

product(size_t, ErgoHeapSizeLimit, 0, \

"Maximum ergonomically set heap size (in bytes); zero means use " \

"MaxRAM * MaxRAMPercentage / 100") \

range(0, max_uintx) \

product(uintx, MinRAMFraction, 2, \

"Minimum fraction (1/n) of real memory used for maximum heap " \

"size on systems with small physical memory size. " \

"Deprecated, use MinRAMPercentage instead") \

range(1, max_uintx) \

product(uintx, MaxRAMFraction, 4, \

"Maximum fraction (1/n) of real memory used for maximum heap " \

"size. " \

"Deprecated, use MaxRAMPercentage instead") \

range(1, max_uintx) \

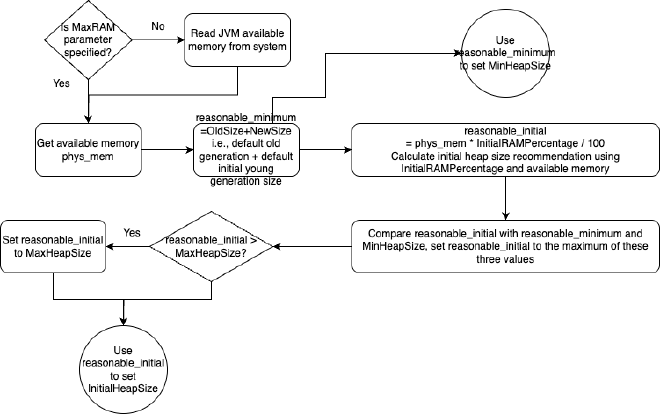

Then if we also haven’t set MinHeapSize and InitialHeapSize, they will also go through the following calculation process:

The process involves the following parameters, and some deprecated parameters that will be converted to non-deprecated parameters:

NewSize: Initial young generation size, preset value is around 1.3MB (1*13/10)OldSize: Old generation size, preset value is around 5.2MB (4*13/10)InitialRAMPercentage: Initial heap memory is this parameter’s specified percentage of available memory, default is 1.5625, i.e., 1.5625%InitialRAMFraction: Deprecated, if configured will be converted toInitialRAMPercentagewith conversion relationship:InitialRAMPercentage= 100.0 /InitialRAMFraction

Corresponding source code: https://github.com/openjdk/jdk/blob/jdk-21+3/src/hotspot/share/gc/shared/gc_globals.hpp:

product(size_t, NewSize, ScaleForWordSize(1*M), \

"Initial new generation size (in bytes)") \

constraint(NewSizeConstraintFunc,AfterErgo) \

product(size_t, OldSize, ScaleForWordSize(4*M), \

"Initial tenured generation size (in bytes)") \

range(0, max_uintx) \

product(double, InitialRAMPercentage, 1.5625, \

"Percentage of real memory used for initial heap size") \

range(0.0, 100.0) \

product(uintx, InitialRAMFraction, 64, \

"Fraction (1/n) of real memory used for initial heap size. " \

"Deprecated, use InitialRAMPercentage instead") \

range(1, max_uintx) \

3.4. Compressed Object Pointer Related Mechanism - UseCompressedOops#

3.4.1. Significance of Compressed Object Pointers#

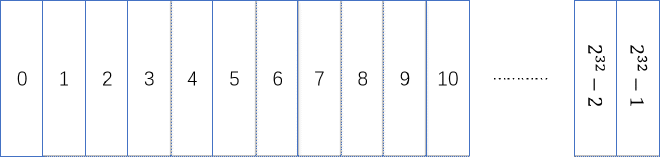

Most modern machines are 64-bit, and JVM has only provided 64-bit virtual machines since version 9. In JVM, an object pointer corresponds to the starting position of the virtual memory where the process stores this object, also 64-bit in size:

We know that for 32-bit addressing, it only supports addressing up to 4GB of memory, which might not be enough for current JVMs where heap size alone might exceed 4GB. So currently object pointers are generally 64-bit to support large memory. However, compared to 32-bit pointer addressing, performance degrades. We know CPUs can only process data in registers, and between registers and memory, there are many levels of CPU cache. Although memory is getting cheaper and larger, CPU cache hasn’t gotten larger, which means if using 64-bit pointer addressing compared to previous 32-bit, CPU cache can hold half the number of pointers.

Java is an object-oriented language, and the most frequent operations in JVM are operations on objects, such as loading an object’s field, storing an object’s field, all of which require accessing object pointers. So JVM wants to optimize object pointers as much as possible, which introduces compressed object pointers, allowing object pointers to remain 32-bit when conditions are met.

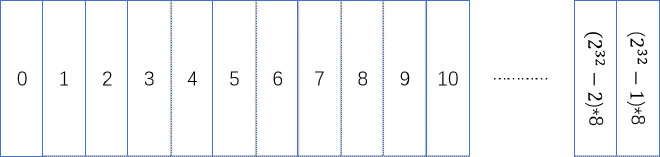

For 32-bit pointers, assuming each 1 represents 1 byte, then it can describe 0~2^32-1, these 2^32 bytes, which is 4 GB of virtual memory.

What if I let each 1 represent 8 bytes? That is, make this virtual memory 8-byte aligned, meaning when I use this memory, the minimum allocation unit is 8 bytes. For Java heap memory, this means an object’s occupied space must be a multiple of 8 bytes; if not enough, it will be padded to a multiple of 8 bytes to ensure alignment. This way it can describe at most 2^32 * 8 bytes, which is 32 GB of virtual memory.

This is the principle of compressed pointers. The related JVM parameter mentioned above is: ObjectAlignmentInBytes, which indicates how many bytes each object in the Java heap needs to be aligned to, i.e., how many bytes the heap is aligned to. Value range is 8 ~ 256, must be a power of 2, because powers of 2 can simplify many operations, for example, taking remainder with powers of 2 can be simplified to bitwise AND operations with powers of 2 minus 1, and multiplication and division can be simplified to shift operations.

If the configured maximum heap memory exceeds 32 GB (when JVM is 8-byte aligned), compressed pointers will become ineffective (actually not exceeding 32GB, it will become ineffective when slightly less than 32GB, there are other factors affecting this, which will be discussed in the next section). However, this 32 GB is related to byte alignment size, which is the size configured by -XX:ObjectAlignmentInBytes=8 (default is 8 bytes, meaning Java defaults to 8-byte alignment). If you configure -XX:ObjectAlignmentInBytes=16, then compressed pointers won’t become ineffective until maximum heap memory exceeds 64 GB. If you configure -XX:ObjectAlignmentInBytes=32, then compressed pointers won’t become ineffective until maximum heap memory exceeds 128 GB.

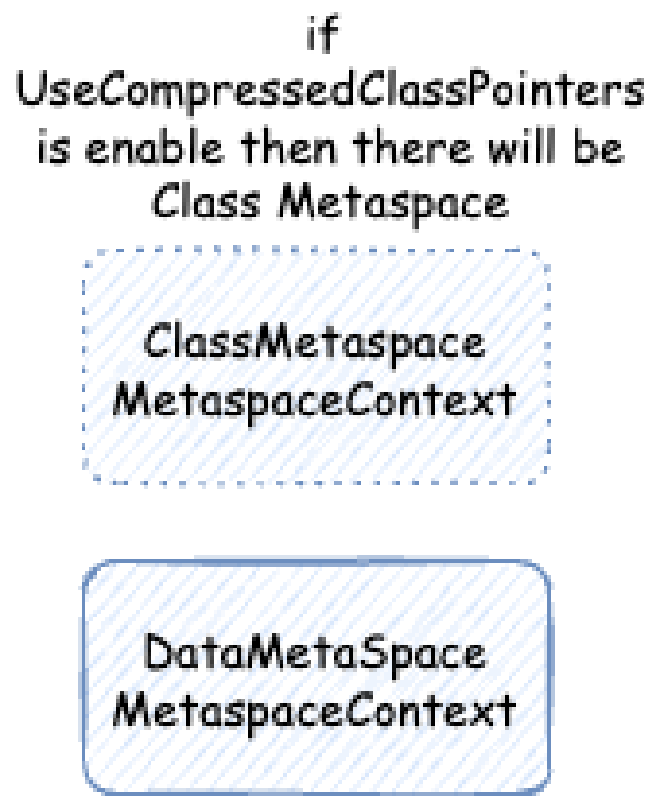

3.4.2. Evolution of Relationship Between Compressed Object Pointers and Compressed Class Pointers#

In older versions, UseCompressedClassPointers depended on UseCompressedOops, meaning if compressed object pointers weren’t enabled, compressed class pointers couldn’t be enabled either. But starting from Java 15 Build 23, UseCompressedClassPointers no longer depends on UseCompressedOops, and the two have become independent in most cases. Except when using JVM Compiler Interface (such as using GraalVM) on x86 CPUs. Reference JDK ISSUE: https://bugs.openjdk.java.net/browse/JDK-8241825 - Make compressed oops and compressed class pointers independent (x86_64, PPC, S390) and source code:

https://github.com/openjdk/jdk/blob/jdk-21%2B3/src/hotspot/cpu/x86/globalDefinitions_x86.hpp:#define COMPRESSED_CLASS_POINTERS_DEPENDS_ON_COMPRESSED_OOPS EnableJVMCIOn x86 CPU, whetherUseCompressedClassPointersdepends onUseCompressedOopsdepends on whether JVMCI is enabled. In default JVM releases, EnableJVMCI is falsehttps://github.com/openjdk/jdk/blob/jdk-21%2B3/src/hotspot/cpu/arm/globalDefinitions_arm.hpp:#define COMPRESSED_CLASS_POINTERS_DEPENDS_ON_COMPRESSED_OOPS falseOn ARM CPU,UseCompressedClassPointersdoesn’t depend onUseCompressedOopshttps://github.com/openjdk/jdk/blob/jdk-21%2B3/src/hotspot/cpu/ppc/globalDefinitions_ppc.hpp:#define COMPRESSED_CLASS_POINTERS_DEPENDS_ON_COMPRESSED_OOPS falseOn PPC CPU,UseCompressedClassPointersdoesn’t depend onUseCompressedOopshttps://github.com/openjdk/jdk/blob/jdk-21%2B3/src/hotspot/cpu/s390/globalDefinitions_s390.hpp:#define COMPRESSED_CLASS_POINTERS_DEPENDS_ON_COMPRESSED_OOPS falseOn S390 CPU,UseCompressedClassPointersdoesn’t depend onUseCompressedOops

3.4.3. Different Modes and Addressing Optimization Mechanisms of Compressed Object Pointers#

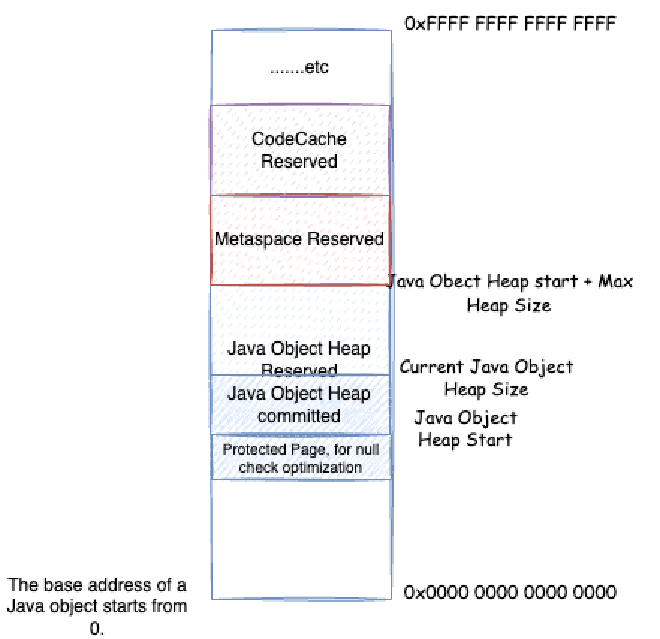

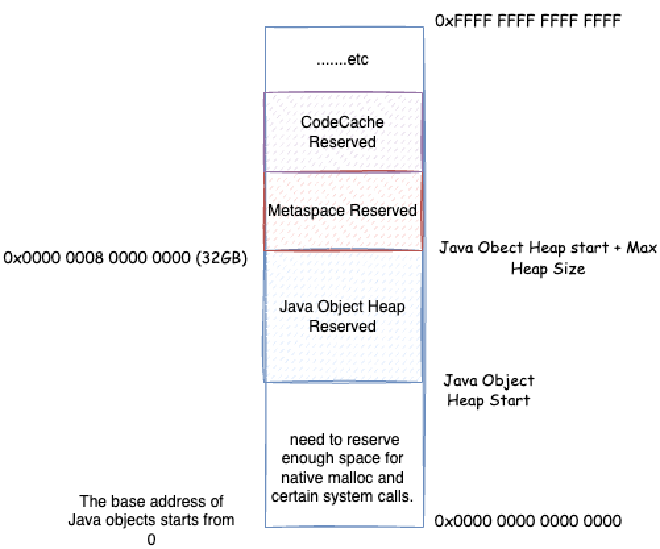

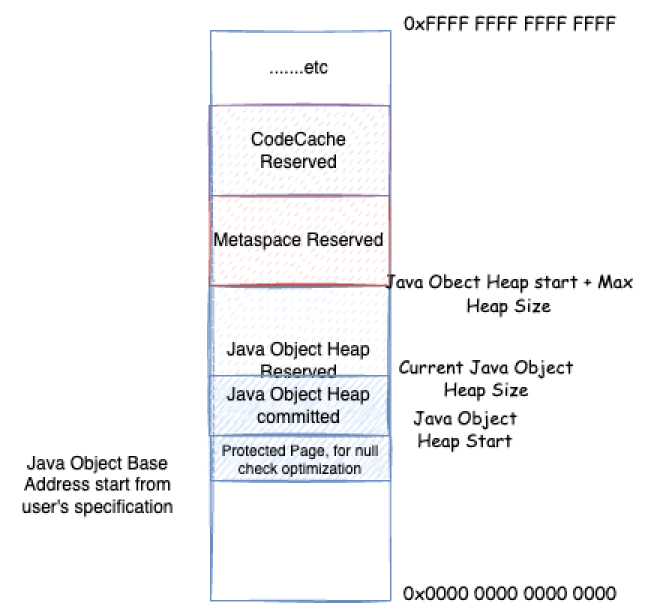

How do object pointers and compressed object pointers convert? Let’s think about some questions first. Through the analysis in Chapter 2, we know that each process has its own virtual address space, and some low-bit space starting from 0 is reserved space for the process’s system calls, for example, 0x0000 0000 0000 0000 ~ 0x0000 0000 0040 0000 is a reserved area that cannot be used

The space that processes can apply for is the native heap space shown in the figure above. So, the virtual memory space of JVM processes definitely won’t start from 0x0000 0000 0000 0000. Different operating systems have different starting points for this native heap space. We don’t care about the specific location here, we only know one thing: JVM needs to apply for memory starting from a certain point in virtual memory, and needs to reserve enough space for possible system call mechanisms, such as some malloc memory we saw in native memory tracking earlier, some of which are actually allocated in this reserved space. Generally, JVM will prioritize considering Java heap memory allocation in native heap, then allocate others in native heap, such as metaspace, code cache space, etc.

When JVM reserves and allocates Java heap space, it will reserve the maximum Java heap space size at once, then reserve and allocate other storage spaces based on this. After that, when allocating Java objects, it commits within the reserved Java heap memory space, then writes data to map physical memory to allocate Java objects. According to the Java heap size expansion and contraction strategy mentioned earlier, it decides whether to continue committing to occupy more physical memory or uncommit to release physical memory:

Java is an object-oriented language, and the most frequent execution in JVM is accessing these objects. In various JVM mechanisms, we must constantly consider how to optimize the speed of accessing these objects. For compressed object pointers, JVM has considered many optimizations. If we want to use compressed object pointers, we need to convert this 64-bit address to a 32-bit address. Then when reading object information pointed to by compressed object pointers, we need to parse this 32-bit address to a 64-bit address before addressing and reading. The conversion formula is as follows:

64-bit address = base address + (compressed object pointer << object alignment offset)compressed object pointer = (64-bit address - base address) >> object alignment offset

The base address is actually the starting point of object addresses. Note that this base address is not necessarily the starting address of the Java heap, as we’ll see later. The object alignment offset is related to the aforementioned ObjectAlignmentInBytes, for example, in the case of ObjectAlignmentInBytes=8, the object alignment offset is 3 (because 8 is 2 to the power of 3). We optimize this formula:

First, we consider removing the base address and object alignment offset, so compressed object pointers can be directly used as object addresses. When can this be done? That is when object addresses start from 0, and maximum heap memory + Java heap starting position is not greater than 4GB. Because in this case, the maximum address of objects in Java heap won’t exceed 4GB, so the range of compressed object pointers can directly represent all objects in Java heap. Compressed object pointers can be directly used as actual memory addresses for objects. Why is it maximum heap memory + Java heap starting position not greater than 4GB? Because from the previous analysis, we know that the space processes can apply for is native heap space. So, the Java heap starting position definitely won’t start from 0x0000 0000 0000 0000.

If maximum heap memory + Java heap starting position is greater than 4GB, the first optimization can’t be used, and object address offset can’t be avoided. But if we can ensure maximum heap memory + Java heap starting position is less than 32-bit * ObjectAlignmentInBytes, in the default case of ObjectAlignmentInBytes=8, which is 32GB, we can still make the base address equal to 0, so 64-bit address = (compressed object pointer << object alignment offset)

However, in the case of ObjectAlignmentInBytes=8, if maximum heap memory is too large, approaching 32GB, to ensure maximum heap memory + Java heap starting position is less than 32GB, the Java heap starting position would be close to 0, which obviously won’t work. So when maximum heap memory approaches 32GB, the second optimization above also becomes ineffective. But we can make Java heap start from an address completely disjoint from 32GB addresses, so addition can be optimized to bitwise OR operation, i.e., 64-bit address = base address | (compressed object pointer << object alignment offset)

Finally, in the case of ObjectAlignmentInBytes=8, if users specify the Java heap starting address themselves through HeapBaseMinAddress, and it intersects with 32GB addresses, and maximum heap memory + Java heap starting position is greater than 32GB, but maximum heap memory doesn’t exceed 32GB, then it can’t be optimized, and can only use 64-bit address = base address + (compressed object pointer << object alignment offset)

To summarize, the four modes we discussed above correspond to four modes of compressed object pointers in JVM (the following description is based on ObjectAlignmentInBytes=8, i.e., the default case):

32-bitcompressed pointer mode: Maximum heap memory + Java heap starting position not greater than 4GB (and Java heap starting position can’t be too small),64-bit address = compressed object pointerZero basedcompressed pointer mode: Maximum heap memory + Java heap starting position not greater than 32GB (and Java heap starting position can’t be too small),64-bit address = (compressed object pointer << object alignment offset)Non-zero disjointcompressed pointer mode: Maximum heap memory not greater than 32GB, due to ensuring Java heap starting position can’t be too small, maximum heap memory + Java heap starting position greater than 32GB,64-bit address = base address | (compressed object pointer << object alignment offset)Non-zero basedcompressed pointer mode: Users specify Java heap starting address themselves throughHeapBaseMinAddress, and it intersects with 32GB addresses, and maximum heap memory + Java heap starting position greater than 32GB, but maximum heap memory doesn’t exceed 32GB,64-bit address = base address + (compressed object pointer << object alignment offset)

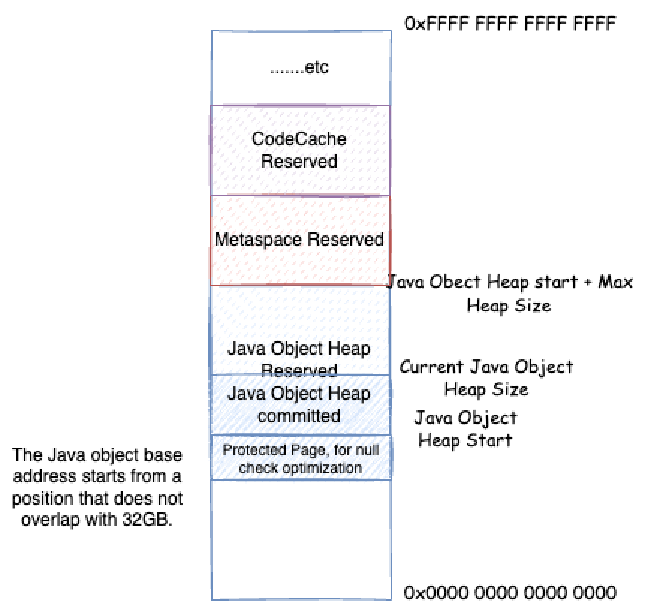

3.5. Why Reserve Page 0, Implementation of Compressed Object Pointer Null Check Elimination#

We learned earlier that compressed object pointers in JVM have four modes. For the two modes where addresses don’t start from 0, i.e., Non-zero disjoint and Non-zero based, the actual heap address doesn’t start from HeapBaseMinAddress, but has one page reserved, called page 0. This page doesn’t map actual memory, accessing addresses within this page will cause Segment Fault exceptions. So why reserve this page? Mainly for null check optimization, implementing null check elimination.

We all know that in Java, if you access member fields or methods of a null reference variable, it will throw NullPointerException. But how is this implemented? Our code doesn’t have explicit null checks that throw NullPointerException if null, but JVM can still throw this Java exception for null. We can guess that JVM might do such a check when accessing each reference variable for member fields or methods:

if (o == null) {

throw new NullPointerException();

}

However, if such a check is done every time each reference variable is accessed for member fields or methods, it’s very inefficient behavior. So, during interpretation execution, such checks might be done every time each reference variable is accessed for member fields or methods. After code runs a certain number of times and enters C1, C2 compilation optimization, these null checks might be eliminated. Possible eliminations include:

- Member method access to this can eliminate null checks for this.

- Code explicitly checks whether a variable is null, and this variable is not volatile

- There was already

a.something()type access earlier, andais not volatile, then latera.somethingElse()doesn’t need null checks anymore - And so on…

For those that can’t be eliminated, JVM tends to make an assumption, i.e., this variable probably won’t be null, JIT optimization first directly eliminates null checks. Java’s null corresponds to compressed object pointer value 0:

enum class narrowOop : uint32_t { null = 0 };

Accessing compressed object pointer address 0 actually means accessing the compressed object pointer base address we discussed earlier. In the four modes:

32-bitcompressed pointer mode: Accessing0x0000 0000 0000 0000, but we know earlier that0x0000 0000 0000 0000is a reserved area that can’t be accessed, will haveSegment Faulterror, sendingSIGSEGVsignalZero basedcompressed pointer mode: Accessing0x0000 0000 0000 0000, but we know earlier that0x0000 0000 0000 0000is a reserved area that can’t be accessed, will haveSegment Faulterror, sendingSIGSEGVsignalNon-zero disjointcompressed pointer mode: Accessing base address, but we know earlier that base address + JVM system page size is a reserved area that’s only Reserved but not committed, can’t be accessed, will haveSegment Faulterror, sendingSIGSEGVsignalNon-zero basedcompressed pointer mode: Accessing base address, but we know earlier that base address + JVM system page size is a reserved area that’s only Reserved but not committed, can’t be accessed, will haveSegment Faulterror, sendingSIGSEGVsignal

For non-compressed object pointer cases, it’s simpler. Non-compressed object pointer null is 0x0000 0000 0000 0000, accessing 0x0000 0000 0000 0000, but we know earlier that 0x0000 0000 0000 0000 is a reserved area that can’t be accessed, will have Segment Fault error, sending SIGSEGV signal.

We can see that if JIT optimization eliminates null checks, then when actually encountering null, there will be Segment Fault error, sending SIGSEGV signal. JVM has handling for SIGSEGV signals:

//This is code under AMD64 CPU

} else if (

//If signal is SIGSEGV

sig == SIGSEGV &&

//And it's SIGSEGV caused by encountering null where null checks were eliminated (we'll see many other places use SIGSEGV later)

MacroAssembler::uses_implicit_null_check(info->si_addr)

) {

// If it's SIGSEGV caused by encountering null, then we need to evaluate whether to continue eliminating null checks here

stub = SharedRuntime::continuation_for_implicit_exception(thread, pc, SharedRuntime::IMPLICIT_NULL);

}

JVM not only uses SIGSEGV signals for null check elimination, but also uses them in other places (such as the StackOverflowError implementation we’ll analyze in detail later). So, we need to judge by checking the address where the SIGSEGV signal occurred. If the address is in the ranges we listed above, then it’s SIGSEGV caused by encountering null where null checks were eliminated:

bool MacroAssembler::uses_implicit_null_check(void* address) {

uintptr_t addr = reinterpret_cast<uintptr_t>(address);

uintptr_t page_size = (uintptr_t)os::vm_page_size();

#ifdef _LP64

//If compressed object pointers are enabled

if (UseCompressedOops && CompressedOops::base() != NULL) {

//If there's a reserved page (page 0), start point is base address

uintptr_t start = (uintptr_t)CompressedOops::base();

//If there's a reserved page (page 0), end point is base address + page size

uintptr_t end = start + page_size;

//If address range is in page 0, then it's SIGSEGV caused by encountering null where null checks were eliminated

if (addr >= start && addr < end) {

return true;

}

}

#endif

//If in page 0 of the entire virtual space, then it's SIGSEGV caused by encountering null where null checks were eliminated

return addr < page_size;

}

Let’s substitute the 4 cases of compressed object pointers:

32-bitcompressed pointer mode: Accessing0x0000 0000 0000 0000, address is in page 0,uses_implicit_null_checkreturns trueZero basedcompressed pointer mode: Accessing0x0000 0000 0000 0000, address is in page 0,uses_implicit_null_checkreturns trueNon-zero disjointcompressed pointer mode: Accessing base address, address is in page 0,uses_implicit_null_checkreturns trueNon-zero basedcompressed pointer mode: Accessing base address, address is in page 0,uses_implicit_null_checkreturns true

For non-compressed object pointer cases, it’s simpler. Non-compressed object pointer null is 0x0000 0000 0000 0000, accessing base address, address is in page 0, uses_implicit_null_check returns true.

This way, we know that JIT might eliminate null checks, throwing NullPointerException through SIGSEGV signals. However, going through SIGSEGV signals requires system calls, and system calls are very inefficient behavior that we need to avoid as much as possible. But the assumption here is that it’s probably not null, so using system calls doesn’t matter. But if a place frequently has null, JIT will consider not optimizing this way, deoptimizing the code and recompiling, no longer eliminating null checks but using explicit null checks to throw.

Finally, we know that reserving page 0 and not mapping memory is actually to make accessing base address trigger Segment Fault. JVM will catch this signal, check whether the memory address that triggered this signal belongs to the first page, and if it does, JVM knows this is caused by a null object. However, from the above, we actually only need to not map the address corresponding to the base address, so why reserve an entire page? This is due to considerations of memory alignment and addressing access speed. Memory mapping to physical memory is all done in page units, so memory needs to be page-aligned.

3.6. Relationship Between Compressed OOPs and Heap Memory Initialization#

In the previous sections, we explained how the three key metrics (MinHeapSize, MaxHeapSize, InitialHeapSize) are calculated when not manually specified, but we didn’t cover compressed object pointers. When compressed object pointers are enabled, after heap memory limits are initialized, the system determines whether compressed object pointers should be enabled based on the parameters:

- First, determine the Java heap starting position:

- Step 1: In different operating systems and CPU environments, the default value of

HeapBaseMinAddressvaries. In most environments, it’s2GB. For example, in Linux x86 environments, checking the source code: https://github.com/openjdk/jdk/blob/jdk-21%2B3/src/hotspot/os_cpu/linux_x86/globals_linux_x86.hpp:define_pd_global(size_t, HeapBaseMinAddress, 2*G); - Set

DefaultHeapBaseMinAddressto the default value ofHeapBaseMinAddress, which is2GB - If the user specifies

HeapBaseMinAddressin startup parameters and it’s less thanDefaultHeapBaseMinAddress, setHeapBaseMinAddresstoDefaultHeapBaseMinAddress - Calculate the maximum heap size for compressed object pointer heap:

- Read the object alignment size parameter

ObjectAlignmentInBytes, default is 8 - Take the base-2 logarithm of

ObjectAlignmentInBytes, recorded asLogMinObjAlignmentInBytes - Left-shift 32 bits by

LogMinObjAlignmentInBytesto getOopEncodingHeapMax, which is the maximum heap size without considering reserved areas - If a reserved area is needed (in

Non-Zero Based DisjointandNon-Zero Basedmodes), subtract the reserved area (page 0 size) fromOopEncodingHeapMax - Read the current JVM’s configured maximum heap size (we analyzed how this is calculated earlier)

- If the JVM’s configured maximum heap is smaller than the compressed object pointer heap’s maximum heap size, and compressed object pointers aren’t explicitly disabled via JVM startup parameters, enable compressed object pointers. Otherwise, disable them.

- If compressed object pointers are disabled, and compressed class pointers strongly depend on compressed object pointers (as analyzed earlier), disable compressed class pointers as well

3.7. Using jol + jhsdb + JVM Logs to Verify Compressed Object Pointers and Java Heap#

Add the jol dependency:

<dependency>

<groupId>org.openjdk.jol</groupId>

<artifactId>jol-core</artifactId>

<version>0.16</version>

</dependency>

Write the code:

package test;

import org.openjdk.jol.info.ClassLayout;

public class TestClass {

// TestClass object contains only one field: next

private String next = new String();

public static void main(String[] args) throws InterruptedException {

// Create a local variable tt on the stack, pointing to a TestClass object created on the heap

final TestClass tt = new TestClass();

// Use jol to output the structure of the object pointed to by tt

System.out.println(ClassLayout.parseInstance(tt).toPrintable());

// Wait indefinitely to prevent program exit

Thread.currentThread().join();

}

}

3.7.1. Verifying 32-bit Compressed Pointer Mode#

Let’s first test the first compressed object pointer mode (32-bit), where the Java heap is located between 0x0000 0000 0000 0000 ~ 0x0000 0001 0000 0000 (0~4GB). Use the following startup parameters:

-Xmx32M -Xlog:coops*=debug

Where -Xlog:coops*=debug displays JVM logs with coops tags at debug level. This log shows the heap’s starting virtual memory location, reserved heap space size, and compressed object pointer mode.

After startup, check the log output:

[0.006s][debug][gc,heap,coops] Heap address: 0x00000000fe000000, size: 32 MB, Compressed Oops mode: 32-bit

test.TestClass object internals:

OFF SZ TYPE DESCRIPTION VALUE

0 8 (object header: mark) 0x0000000000000001 (non-biasable; age: 0)

8 4 (object header: class) 0x00c01000

12 4 java.lang.String TestClass.next (object)

Instance size: 16 bytes

Space losses: 0 bytes internal + 0 bytes external = 0 bytes total

The first log line tells us the heap starts at 0x0000 0000 fe00 0000, size is 32 MB, and compressed object pointer mode is 32-bit. Adding 32 MB to 0x0000 0000 fe00 0000 equals 4GB 0x0000 0001 0000 0000. This confirms our earlier conclusion that the Java heap reserves space starting from the boundary minus the maximum heap size. In this case, 0x0000 0000 0000 0000 ~ 0x0000 0000 fdff ffff memory is used for process system calls and native memory allocation.

The subsequent logs show jol’s object structure output. We can see this object contains a markword (0x0000000000000001), a compressed class pointer (0x00c01000), and the next field. Let’s use jhsdb to examine the process’s actual virtual memory content for verification.

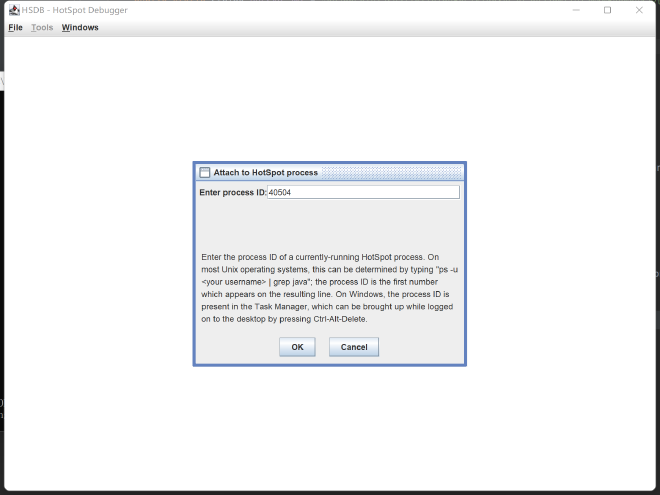

First, open jhsdb in GUI mode: jhsdb hsdb

Then “File” -> “Attach to Hotspot Process”, enter your JVM process ID:

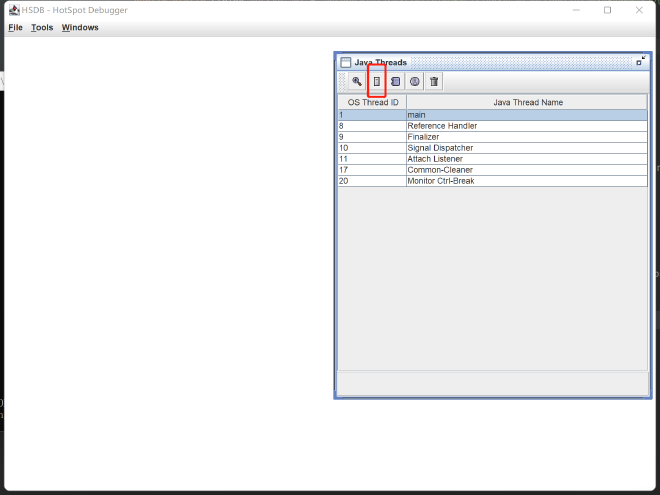

After successful attachment, you can see all threads of your JVM process in the panel. We’ll focus on the main thread. Click on the main thread, then click the red-boxed button (view thread stack memory):

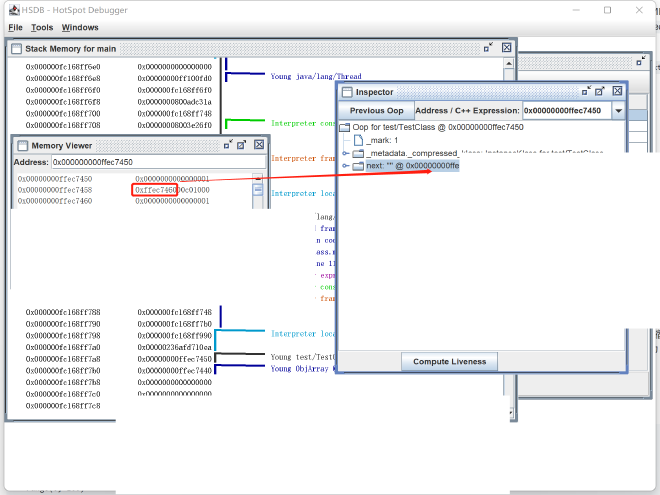

In the main thread stack memory, we can find the local variable tt from our code:

Here we can see the value stored in variable tt, which is actually the object’s address. Open “Tools” -> “Memory Viewer” to examine process virtual memory, and “Tools” -> “Inspector” to convert addresses to corresponding JVM C++ objects. Enter the local variable tt’s value seen in the main thread stack memory into both windows:

From the image above, we can see the object saved by tt starts at address 0x00000000ffec7450. The object header is 0x0000 0000 ffec 7450 ~ 0x0000 0000 ffec 7457, storing value 0x0000 0000 0000 0001, identical to jol’s output. The compressed class pointer is 0x0000 0000 ffec 7458 ~ 0x0000 0000 ffec 745b, storing value 0x00c0 1000, identical to jol’s compressed class pointer address. Next is the next field value, ranging 0x0000 0000 ffec 745c ~ 0x0000 0000 ffec 745f, storing value 0xffec 7460, with the corresponding string object’s actual address also being 0x0000 0000 ffec 7460. This perfectly matches the characteristics of 32-bit mode compressed class pointers we described earlier.

3.7.2. Verifying Zero based Compressed Pointer Mode#

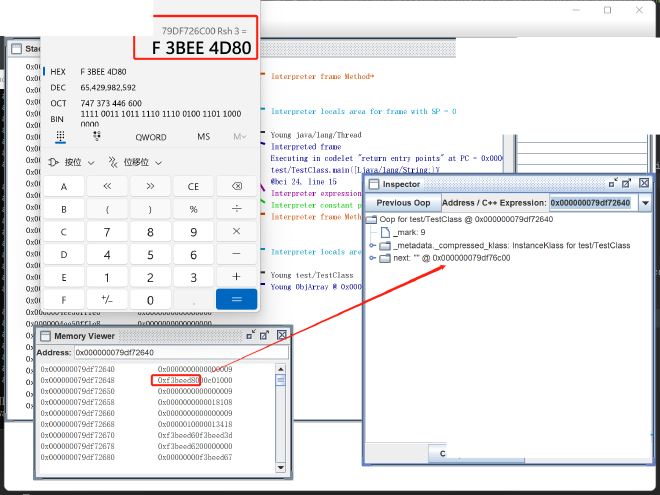

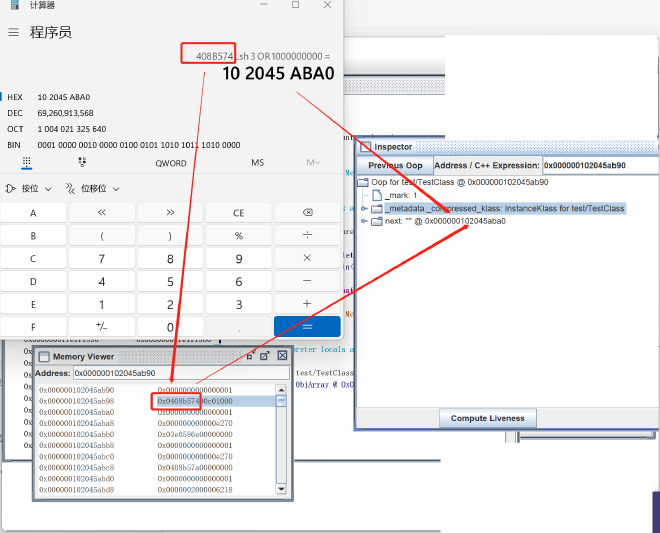

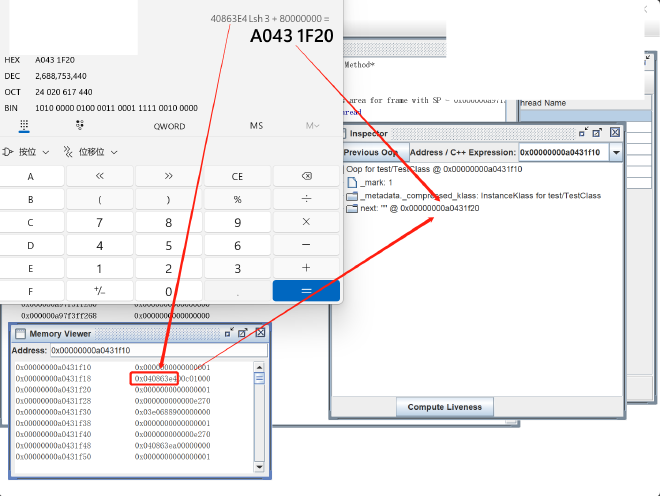

Next, let’s try Zero based mode using parameters -Xmx2050M -Xlog:coops*=debug (platform-dependent; check your platform’s default HeapBaseMinAddress size, typically 2G for x86, so specify a value greater than 4G - 2G = 2G). The log output is:

[0.006s][debug][gc,heap,coops] Heap address: 0x000000077fe00000, size: 2050 MB, Compressed Oops mode: Zero based, Oop shift amount: 3

test.TestClass object internals:

OFF SZ TYPE DESCRIPTION VALUE

0 8 (object header: mark) 0x0000000000000009 (non-biasable; age: 1)

8 4 (object header: class) 0x00c01000

12 4 java.lang.String TestClass.next (object)

Instance size: 16 bytes

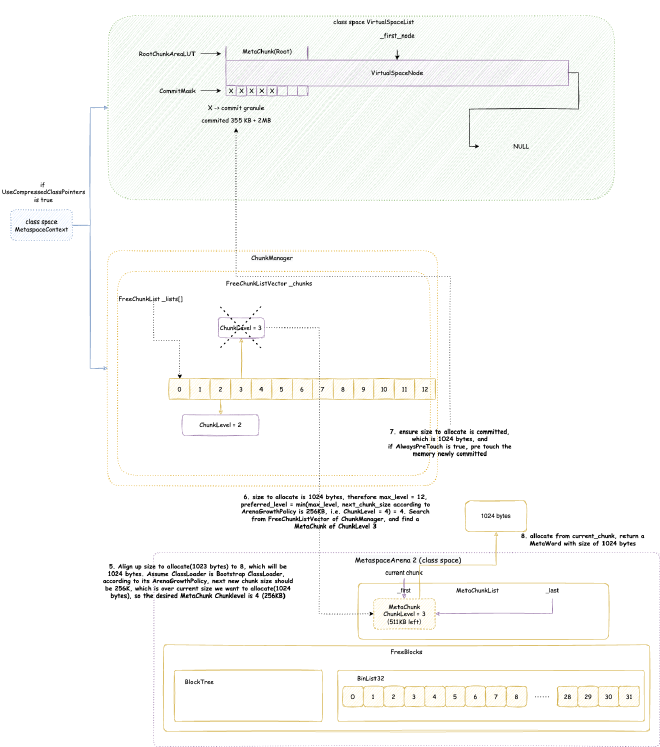

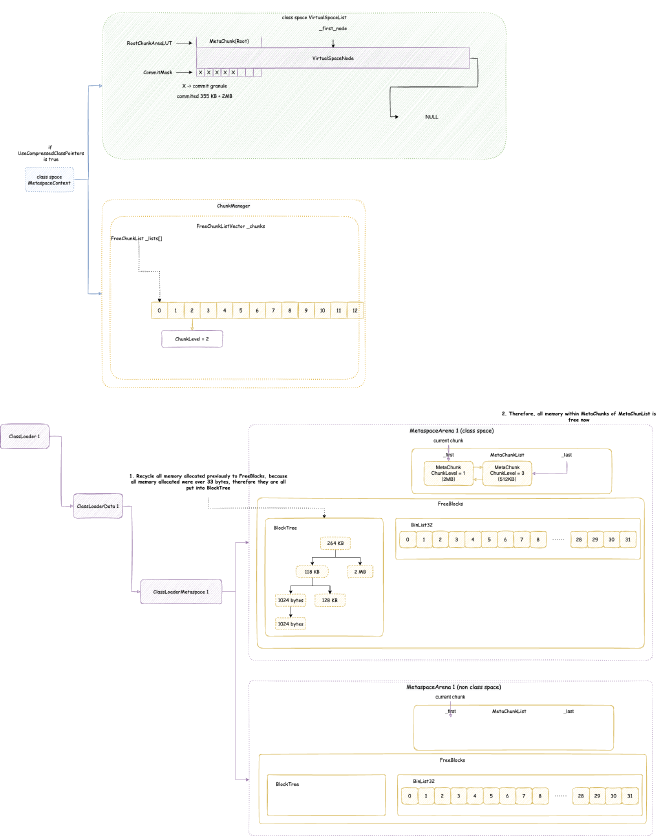

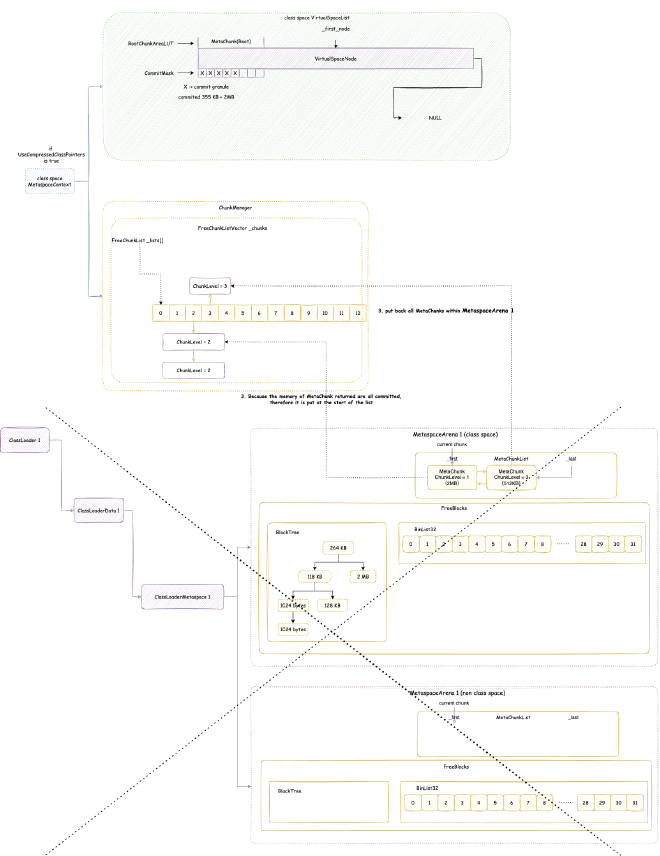

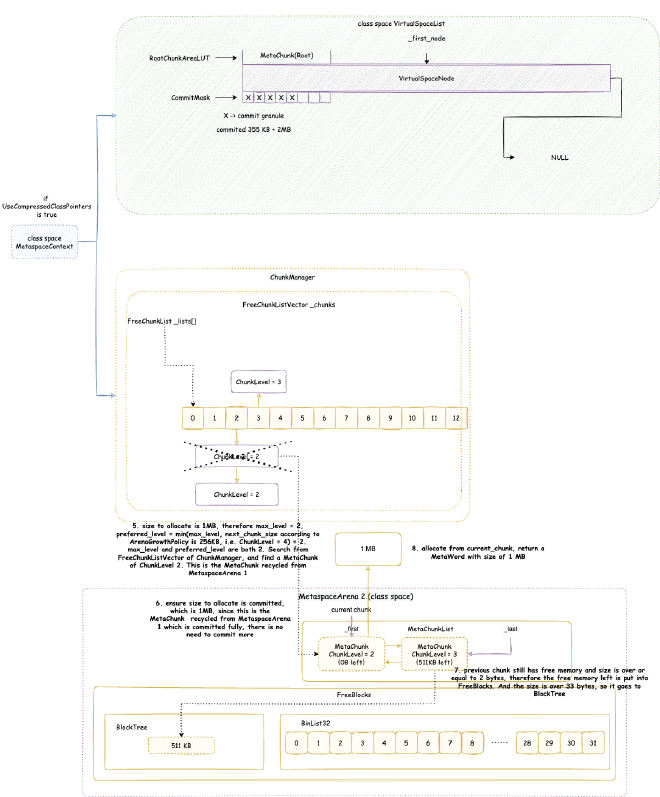

Space losses: 0 bytes internal + 0 bytes external = 0 bytes total