Today, our operations team reached out with a complex SQL query that was giving them trouble. The query was so complex that even running an EXPLAIN took forever to execute! Our backend team jumped in to help solve this SQL performance issue, and in the process, we stumbled upon a deeply hidden production problem that had been lurking in our system.

The Problematic SQL Query#

Here’s the query that started it all:

select

a.share_code,

a.generated_time,

a.share_user_id,

b.user_count,

b.order_count,

a.share_order_id,

b.rewarded_amount

from t_risk_share_code a,

(select count(distinct r.user_id) user_count,

count(distinct r.order_id) order_count,

s.rewarded_amount,

r.share_code

from t_order s,t_order_rel r

where r.order_id = s.id and r.type = 1 and r.share_code = 'recently_shared_order_code'

group by r.share_code) b

where a.share_code = b.share_code and a.type = 1

The first red flag was that even running EXPLAIN on this query was painfully slow, suggesting that some subqueries were actually being executed during the planning phase. So our first step was to break down the subquery and analyze it piece by piece:

select count(distinct r.user_id) user_count,

count(distinct r.order_id) order_count,

max(s.rewarded_amount),

r.share_code

from t_order s,t_order_rel r

where r.order_id = s.id and r.type = 1 and r.share_code = 'recently_shared_order_code'

group by r.share_code

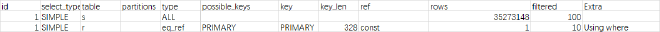

When we ran EXPLAIN on this isolated subquery, it executed quickly, but the results were puzzling:

Wait, what? Why was the t_order table doing a full table scan? This table has proper indexing, with id as the primary key!

Root Cause Analysis#

According to the official MySQL documentation, there are several reasons why MySQL might choose a full table scan:

Table is too small - Index usage isn’t worth it. But this wasn’t our case; both tables contain tens of millions of records.

No suitable indexes for WHERE or ON conditions - Also not our situation. Both tables have appropriate indexes for the WHERE and ON conditions (even though all conditions are in the WHERE clause here, MySQL would optimize this into JOIN ON + WHERE during execution).

Index analysis shows most table values would be hit - When MySQL determines through index analysis that most of the table’s pages would need to be loaded into memory anyway for the final data retrieval, it’s actually faster to just scan the entire table rather than first loading the index into memory to get matching rows, then loading most of the table pages anyway. This makes sense from a performance perspective. However, in our SQL, the

t_order_reltable would only return a few dozen records based on the WHERE conditions, andt_orderhas a 1-to-many relationship witht_order_rel, so this shouldn’t hit too many records.Low cardinality (distinctness) of the column - Cardinality is the number of distinct values divided by the total number of rows, with a maximum of 1. For InnoDB, this value isn’t calculated in real-time and can be inaccurate, especially when column values are updated causing row position changes within pages. However, for DISTINCT or primary key columns, this doesn’t need calculation as it’s always 1. Low cardinality is similar to situation #3 - too many rows would be hit. Since our SQL uses the primary key, this doesn’t apply here.

While none of these situations matched our case, here are some optimization strategies we use to avoid full table scans:

Regular statistics updates: To make the SQL execution plan analyzer more accurate (addressing situation #4), we periodically run

ANALYZE TABLEduring low-traffic periods to ensure the analyzer’s statistical data accuracy.Force index usage: Considering database sharding and the fact that database SQL execution plans aren’t always perfect and can choose wrong indexes, we generally add

FORCE INDEXto OLTP queries to enforce specific indexes. This is a common pitfall when using middleware-based sharding solutions (like sharding-jdbc) or native distributed databases (like TiDB).MySQL configuration: We set

--max-seeks-for-key = 10000(the default value is very large). This limits the number of rows the SQL execution plan analyzer thinks it might scan when using an index. The principle is simple, as shown in the source code:

double find_cost_for_ref(const THD *thd, TABLE *table, unsigned keyno,

double num_rows, double worst_seeks) {

// Compare analyzed scan rows with max_seeks_for_key, take the smaller value

// This means the SQL analyzer's conclusion won't exceed max_seeks_for_key for index scans

num_rows = std::min(num_rows, double(thd->variables.max_seeks_for_key));

if (table->covering_keys.is_set(keyno)) {

// We can use only index tree

const Cost_estimate index_read_cost =

table->file->index_scan_cost(keyno, 1, num_rows);

return index_read_cost.total_cost();

} else if (keyno == table->s->primary_key &&

table->file->primary_key_is_clustered()) {

const Cost_estimate table_read_cost =

table->file->read_cost(keyno, 1, num_rows);

return table_read_cost.total_cost();

} else

return min(table->cost_model()->page_read_cost(num_rows), worst_seeks);

}

This value shouldn’t be set too low, as it might cause the system to choose an index that scans the most rows when multiple indexes are available.

Using Optimizer Trace#

When EXPLAIN wasn’t enough for our analysis, we had to turn to optimizer_trace. We don’t use optimizer_trace as our first choice because it requires the SQL to execute completely before providing all useful information.

## Enable optimizer_trace

set session optimizer_trace="enabled=on";

## Execute the SQL

select .....

## Query trace results

SELECT trace FROM information_schema.OPTIMIZER_TRACE;

Through the trace results, we discovered that the actual executed SQL was:

SELECT

various_fields

FROM

`t_order_rel` `r`

JOIN `t_order` `s`

WHERE

(

( `r`.`order_id` = CONVERT ( `s`.`id` USING utf8mb4 ) )

AND ( `r`.`type` = 1 )

AND ( `r`.`share_code` = 'B2MTB6C' )

)

Aha! The two tables had different character encodings for their fields! This caused the JOIN ON condition to wrap a character encoding conversion: CONVERT ( s.id USING utf8mb4 ). We know that when fields are wrapped with functions in condition matching, indexes can’t be used. For example: date(create_time) < "2021-8-1" can’t use indexes, but create_time < "2021-8-1" can. Comparisons between different column types also can’t use indexes because MySQL automatically wraps them with type conversion functions. This is a common misuse caused by MySQL’s syntactic sugar.

The t_order_rel table had a different default encoding than other tables. Since some fields used emoji expressions, the entire table was created with utf8mb4 encoding by default. Moreover, this table was only used for logging purposes, with no OLTP business operations - only some OLAP scenarios used by operations team members. That’s why this issue went unnoticed for so long.

After fixing the field encoding, the SQL finally stopped doing full table scans. Going forward, we’ll be more careful about:

- Specifying default encoding at the database level, not specifying default encoding for tables, and only specifying encoding for individual fields that need special encoding

- Being mindful of type consistency on both sides of JOIN and WHERE comparisons to avoid preventing index usage

This was a great reminder that sometimes the most impactful issues are the ones hiding in plain sight!